velo2cam_calibration package from velo2cam_calibration repo

Package Summary

| Tags | No category tags. |

| Version | 1.0.1 |

| License | GPLv2 |

| Build type | CATKIN |

| Use | RECOMMENDED |

Repository Summary

| Description | Automatic Extrinsic Calibration Method for LiDAR and Camera Sensor Setups. ROS Package. |

| Checkout URI | https://github.com/beltransen/velo2cam_calibration.git |

| VCS Type | git |

| VCS Version | master |

| Last Updated | 2022-03-16 |

| Dev Status | MAINTAINED |

| CI status | Continuous Integration : 0 / 0 |

| Released | UNRELEASED |

| Tags | automatic-calibration-algorithm |


Package Description

Additional Links

Maintainers

- Jorge Beltran

- Carlos Guindel

Authors

- Jorge Beltran

- Carlos Guindel

velo2cam_calibration

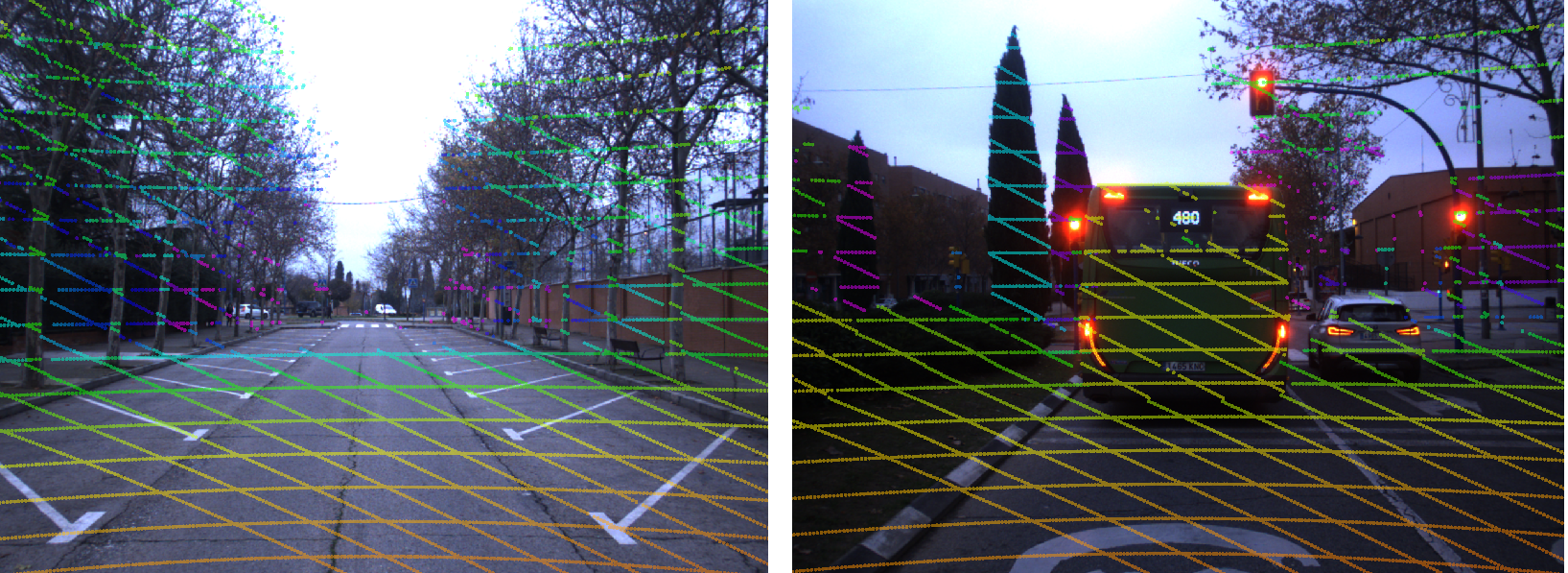

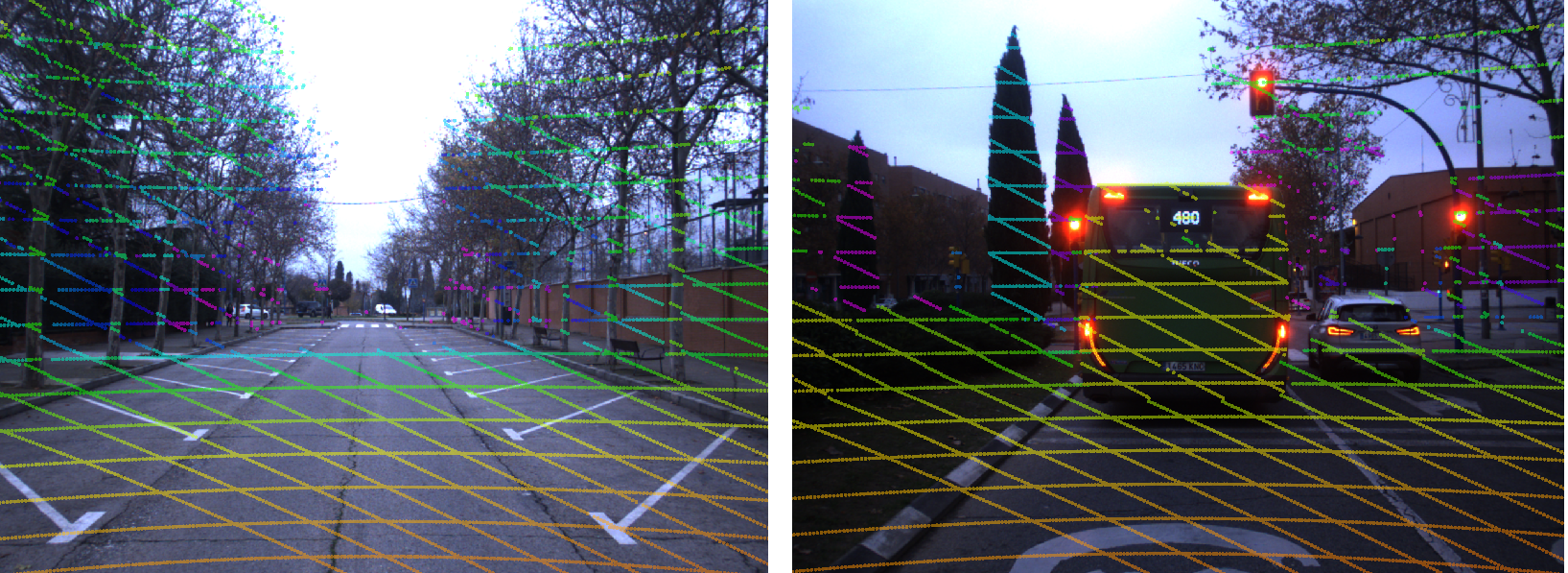

The velo2cam_calibration software implements a state-of-the-art automatic calibration algorithm for pairs of sensors composed of LiDAR and camera devices in any possible combination, as described in this paper:

Automatic Extrinsic Calibration Method for LiDAR and Camera Sensor Setups

Jorge Beltrán, Carlos Guindel, Arturo de la Escalera, Fernando García

IEEE Transactions on Intelligent Transportation Systems, 2022

[Paper] [Preprint]

Setup

This software is provided as a ROS package. To install:

- Clone the repository into your catkin_ws/src/ folder.

- Install run dependencies:

    sudo apt-get install ros-<distro>-opencv-apps

- Build your workspace as usual.
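The setup steps above can be sketched as a single shell script. This is a sketch under stated assumptions, not the authors' installation procedure: the workspace path `~/catkin_ws`, the `noetic` fallback for the distro name, the use of `catkin_make` as the build tool, and the `run` dry-run helper are all illustrative choices. By default the script only prints each command; set `DRY_RUN=0` to execute them.

```shell
#!/usr/bin/env bash
# Sketch of the setup steps. Assumptions (not taken from the package docs):
#   - the catkin workspace lives at ~/catkin_ws
#   - ROS_DISTRO is set by a sourced ROS environment ("noetic" is only a fallback)
#   - the workspace is built with catkin_make
set -e

CATKIN_WS="${CATKIN_WS:-$HOME/catkin_ws}"
DISTRO="${ROS_DISTRO:-noetic}"
REPO_URL="https://github.com/beltransen/velo2cam_calibration.git"

# Hypothetical helper: with DRY_RUN=1 (the default) a command is printed, not run.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# 1. Clone the repository into the workspace's src/ folder.
run git clone "$REPO_URL" "$CATKIN_WS/src/velo2cam_calibration"

# 2. Install run dependencies.
run sudo apt-get install "ros-${DISTRO}-opencv-apps"

# 3. Build the workspace.
run catkin_make -C "$CATKIN_WS"
```

After a real build, source `devel/setup.bash` from the workspace so that `roslaunch` can find the package.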

Usage

See HOWTO.md for detailed instructions on how to use this software.

To test the algorithm in a virtual environment, you can launch any of the calibration scenarios included in our Gazebo validation suite.

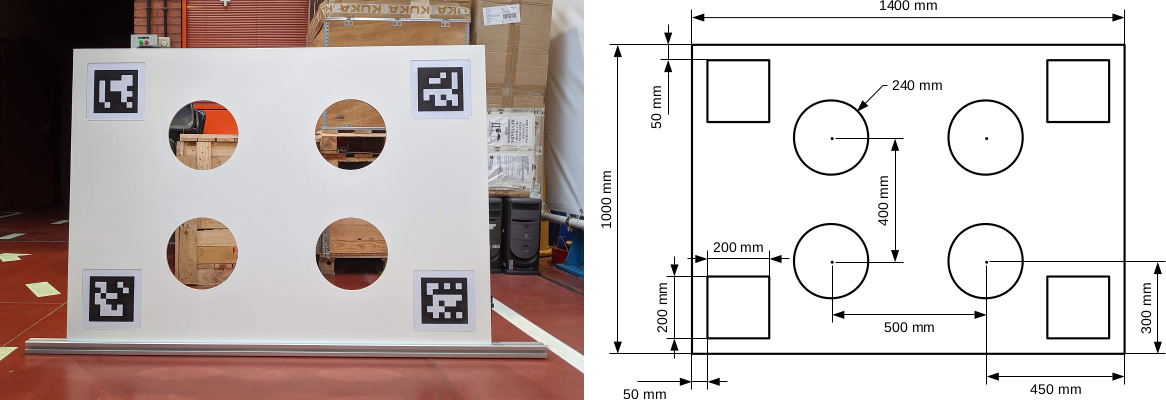

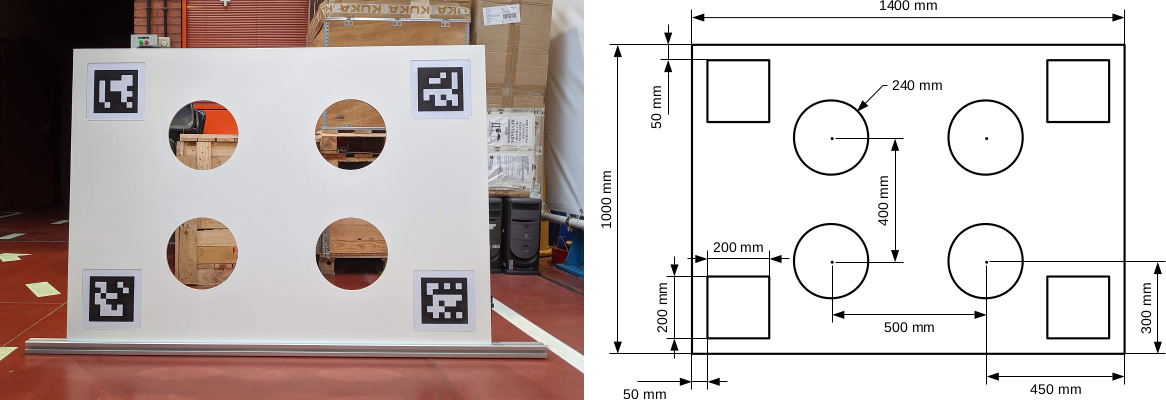

Calibration target

The following picture shows a possible embodiment of the proposed calibration target used by this algorithm and its corresponding dimensional drawing.

Note: Other sizes may be used for convenience. If so, configure the node parameters accordingly.

Citation

If you use this work in your research, please consider citing the following paper:

@article{beltran2022,

author={Beltrán, Jorge and Guindel, Carlos and de la Escalera, Arturo and García, Fernando},

journal={IEEE Transactions on Intelligent Transportation Systems},

title={Automatic Extrinsic Calibration Method for LiDAR and Camera Sensor Setups},

year={2022},

doi={10.1109/TITS.2022.3155228}

}

A previous version of this tool is available here and was described in this paper.

Wiki Tutorials

Package Dependencies

| Name |
|---|
| cv_bridge |
| image_transport |
| message_filters |
| roscpp |
| sensor_msgs |
| std_msgs |
| stereo_msgs |
| tf |
| tf_conversions |
| image_geometry |
| pcl_ros |
| dynamic_reconfigure |
| cmake_modules |
| catkin |

System Dependencies

| Name |

|---|

| tinyxml |

Dependent Packages

Launch files

- launch/registration.launch
  - sensor1_type [default: lidar]
  - sensor2_type [default: mono]
  - sensor1_id [default: 0]
  - sensor2_id [default: 0]

- launch/lidar_pattern.launch
  - stdout [default: screen]
  - cloud_topic [default: velodyne_points]
  - sensor_id [default: 0]

- launch/stereo_pattern.launch
  - stdout [default: screen]
  - camera_name [default: /stereo_camera]
  - image_topic [default: image_rect_color]
  - frame_name [default: stereo_camera]
  - sensor_id [default: 0]

- launch/mono_pattern.launch
  - stdout [default: screen]
  - camera_name [default: /mono_camera]
  - image_topic [default: image_color]
  - frame_name [default: mono_camera]
  - sensor_id [default: 0]
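The launch arguments listed above are passed to `roslaunch` as `name:=value` pairs. The sketch below prints one command line per launch file for a LiDAR plus monocular camera pair, using only the documented defaults as values. The `launch_cmd` helper is hypothetical, and the order shown (pattern nodes first, then registration) is an assumption; HOWTO.md remains the reference for the actual procedure.

```shell
#!/usr/bin/env bash
# Sketch: build roslaunch command lines from the documented launch arguments.
# Each printed line is meant to be run in its own terminal, since roslaunch
# stays in the foreground.
PKG="velo2cam_calibration"

# Hypothetical helper: print a roslaunch command for one launch file
# followed by its name:=value arguments.
launch_cmd() {
  file="$1"
  shift
  echo "roslaunch $PKG $file $*"
}

# Pattern detection on the LiDAR cloud (values are the listed defaults).
launch_cmd lidar_pattern.launch cloud_topic:=velodyne_points sensor_id:=0

# Pattern detection on the monocular camera image (values are the listed defaults).
launch_cmd mono_pattern.launch camera_name:=/mono_camera \
  image_topic:=image_color frame_name:=mono_camera sensor_id:=0

# Registration between the two sensors.
launch_cmd registration.launch sensor1_type:=lidar sensor2_type:=mono \
  sensor1_id:=0 sensor2_id:=0
```

For a real setup, replace the topic, camera, and frame names with the ones your drivers publish.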

Messages

Services

Plugins
