aruco_ros repository

Repository Summary

| Field | Value |
|---|---|
| Description | Software package and ROS wrappers of the Aruco Augmented Reality marker detector library |
| Checkout URI | https://github.com/pal-robotics/aruco_ros.git |
| VCS Type | git |
| VCS Version | humble-devel |
| Last Updated | 2025-04-10 |
| Dev Status | DEVELOPED |
| CI status | No Continuous Integration |
| Released | RELEASED |
| Tags | robot robotics aruco ros aruco-library fiducial-marker aruco-detector aruco-marker-detection aruco-ros fiducial-marker-detector |
| Contributing | Help Wanted (0), Good First Issues (0), Pull Requests to Review (0) |

Packages

| Name | Version |
|---|---|
| aruco | 5.0.5 |
| aruco_msgs | 5.0.5 |
| aruco_ros | 5.0.5 |

README

aruco_ros

Software package and ROS wrappers of the Aruco Augmented Reality marker detector library.

Features

- High-framerate tracking of AR markers

- Generate AR markers with given size and optimized for minimal perceptive ambiguity (when there are more markers to track)

- Enhanced precision tracking by using boards of markers

- ROS wrappers

Applications

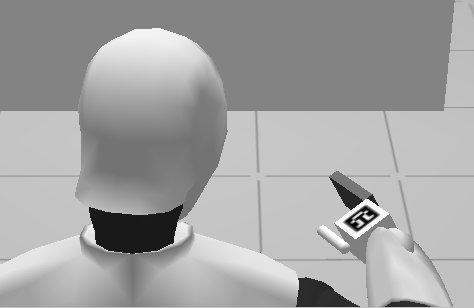

- Object pose estimation

- Visual servoing: track object and hand at the same time

ROS API

Messages

- aruco_ros/Marker.msg

        Header header
        uint32 id
        geometry_msgs/PoseWithCovariance pose
        float64 confidence

- aruco_ros/MarkerArray.msg

        Header header
        aruco_ros/Marker[] markers
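As a rough illustration of how these fields might be consumed, the Python sketch below summarizes a `MarkerArray`-shaped object. It is duck-typed and uses `SimpleNamespace` stand-ins so that it runs without ROS installed. The helper names (`summarize_marker`, `summarize_markers`, `make_fake_marker`) and the `min_confidence` threshold are hypothetical and not part of aruco_ros.

```python
from types import SimpleNamespace


def summarize_marker(marker):
    """Return (id, (x, y, z), confidence) for one Marker-shaped object."""
    # Marker.pose is a geometry_msgs/PoseWithCovariance, so the actual
    # geometry_msgs/Pose sits one level down, at marker.pose.pose.
    p = marker.pose.pose.position
    return marker.id, (p.x, p.y, p.z), marker.confidence


def summarize_markers(marker_array, min_confidence=0.0):
    """Summarize every marker whose confidence is at least min_confidence."""
    return [
        summarize_marker(m)
        for m in marker_array.markers
        if m.confidence >= min_confidence
    ]


def make_fake_marker(marker_id, x, y, z, confidence):
    """Build a stand-in with the same field layout as Marker.msg (hypothetical)."""
    position = SimpleNamespace(x=x, y=y, z=z)
    pose = SimpleNamespace(position=position)
    return SimpleNamespace(
        id=marker_id,
        pose=SimpleNamespace(pose=pose),
        confidence=confidence,
    )


# Two made-up detections, used only to exercise the helpers above.
fake_array = SimpleNamespace(
    markers=[
        make_fake_marker(26, 0.1, 0.0, 0.5, 0.9),
        make_fake_marker(7, -0.2, 0.1, 1.0, 0.3),
    ]
)
for marker_id, xyz, conf in summarize_markers(fake_array, min_confidence=0.5):
    print(marker_id, xyz, conf)
```

In a ROS node, the same functions could be given the message received in a subscriber callback instead of the stand-in objects.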

Kinetic changes

-

Updated the Aruco library to version 3.0.4

-

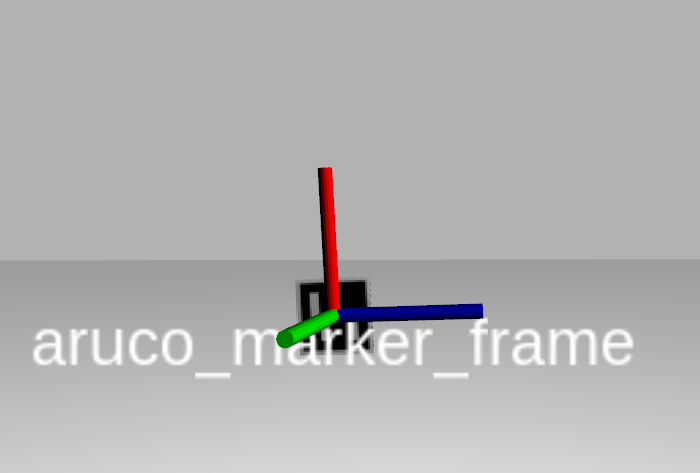

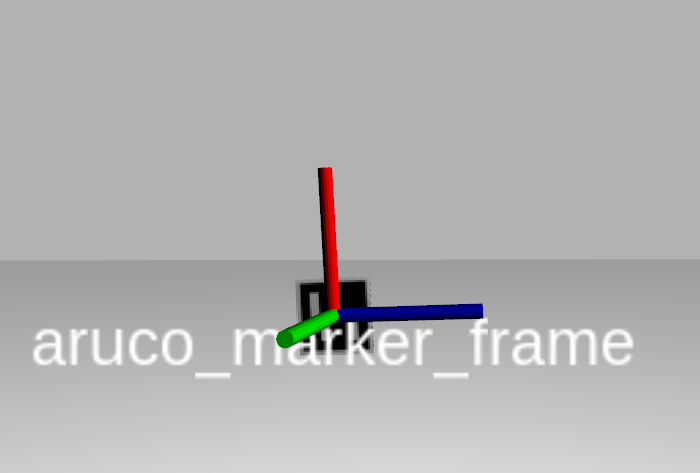

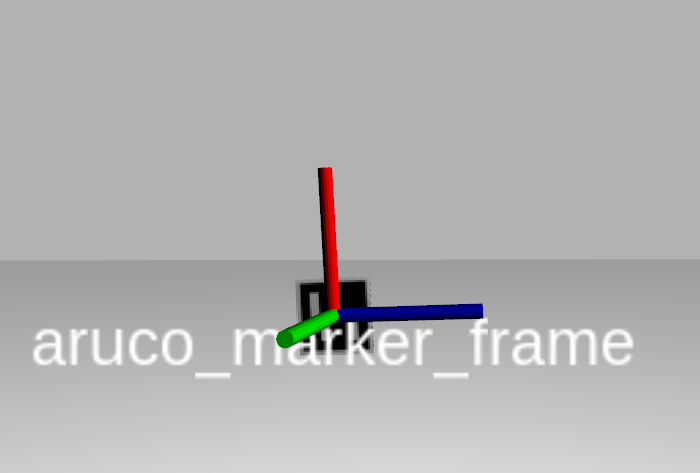

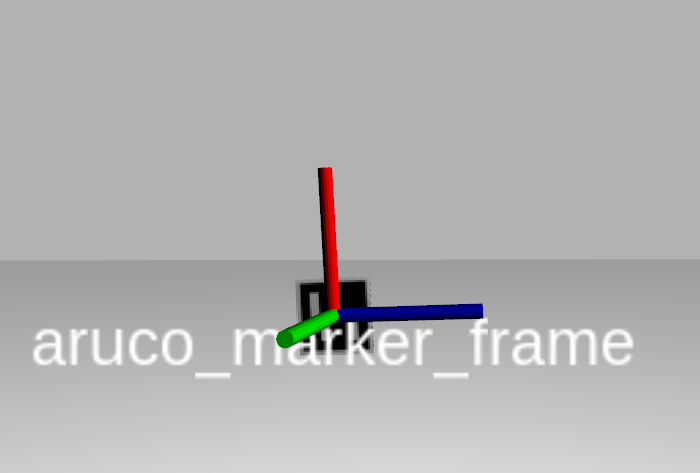

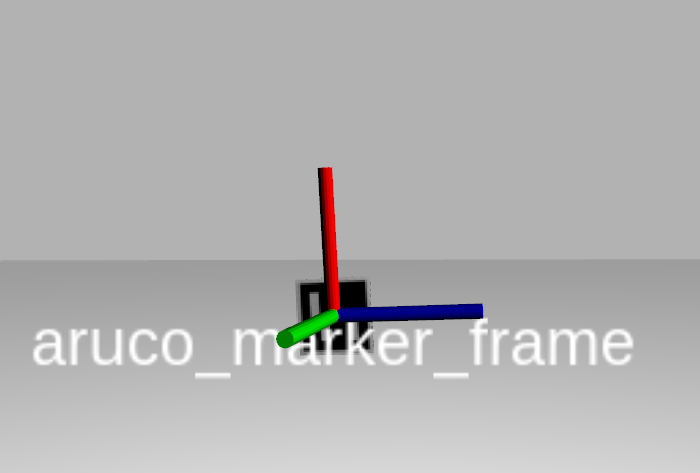

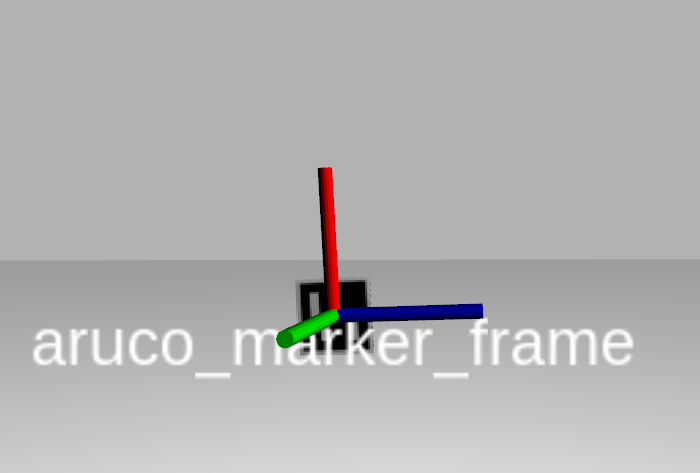

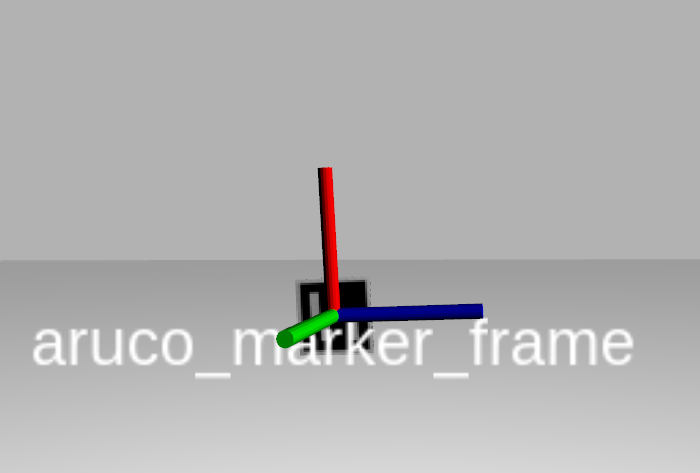

Changed the coordinate system to match the library’s, the convention is shown in the image below, following rviz conventions, X is red, Y is green and Z is blue.

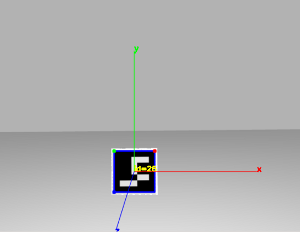
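To make the axis convention concrete, the sketch below rotates the unit X, Y and Z axes by a pose orientation quaternion. The result gives the directions in which the red, green and blue axes would point in the reference frame. It is plain Python with hypothetical helper names (`rotate`, `marker_axes`) and assumes the (x, y, z, w) field order of geometry_msgs/Quaternion; it is not code from aruco_ros.

```python
import math


def rotate(q, v):
    """Rotate vector v by the unit quaternion q = (x, y, z, w)."""
    qx, qy, qz, qw = q
    vx, vy, vz = v
    # t = 2 * cross(q_vec, v)
    tx = 2.0 * (qy * vz - qz * vy)
    ty = 2.0 * (qz * vx - qx * vz)
    tz = 2.0 * (qx * vy - qy * vx)
    # v' = v + w * t + cross(q_vec, t)
    return (
        vx + qw * tx + (qy * tz - qz * ty),
        vy + qw * ty + (qz * tx - qx * tz),
        vz + qw * tz + (qx * ty - qy * tx),
    )


def marker_axes(q):
    """Return the marker's X (red), Y (green), Z (blue) axes in the reference frame."""
    return {
        "x": rotate(q, (1.0, 0.0, 0.0)),  # drawn red in rviz
        "y": rotate(q, (0.0, 1.0, 0.0)),  # drawn green in rviz
        "z": rotate(q, (0.0, 0.0, 1.0)),  # drawn blue in rviz
    }


# Example orientation: a 90 degree rotation about the Z axis.
quarter_turn = (0.0, 0.0, math.sin(math.pi / 4), math.cos(math.pi / 4))
for name, axis in marker_axes(quarter_turn).items():
    print(name, [round(c, 3) for c in axis])
```

With the quarter turn used here, the marker's X axis ends up along the reference frame's Y axis, while Z is unchanged.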

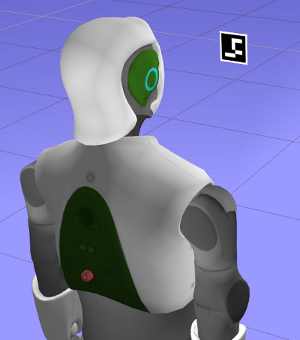

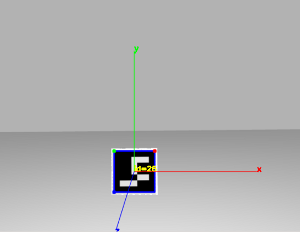

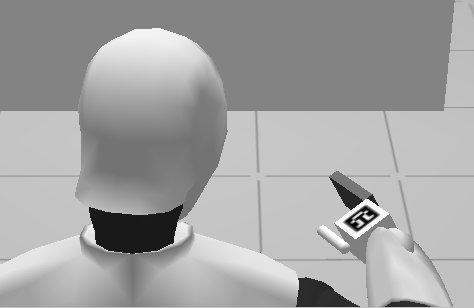

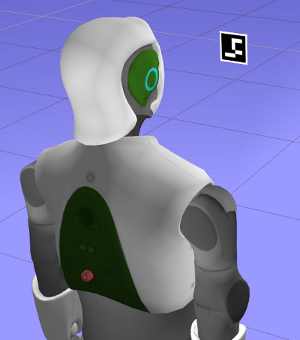

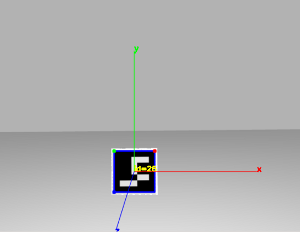

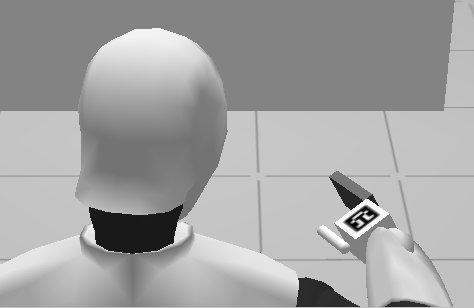

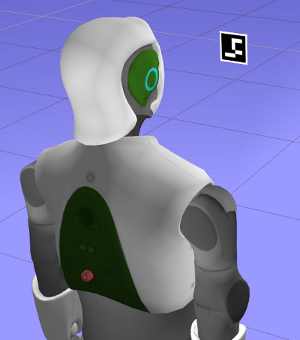

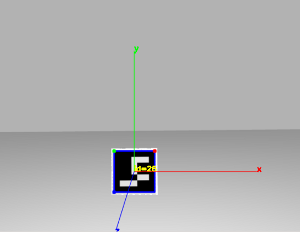

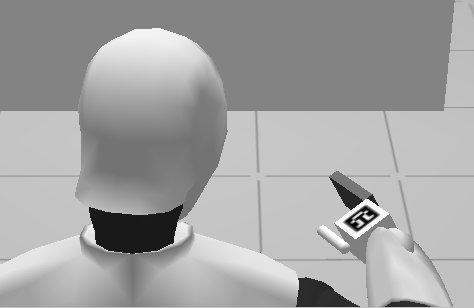

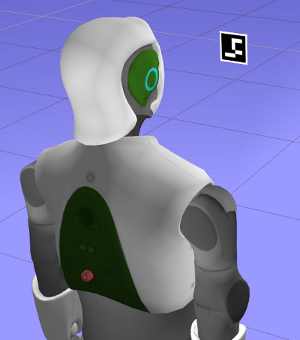

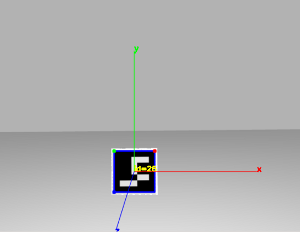

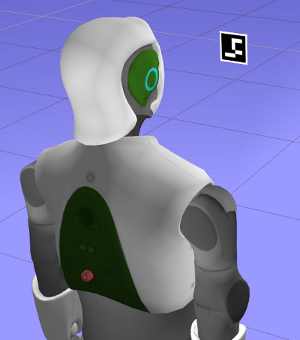

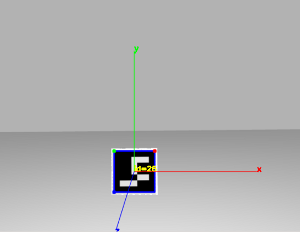

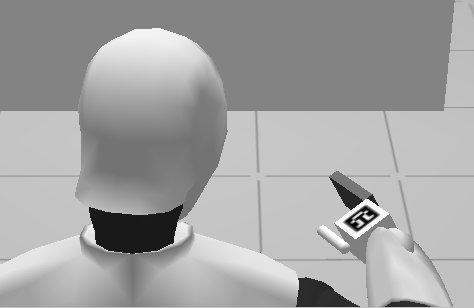

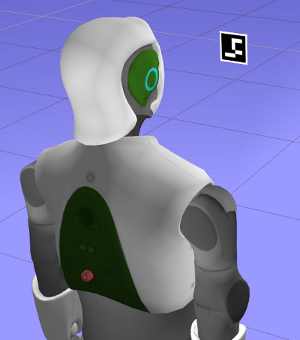

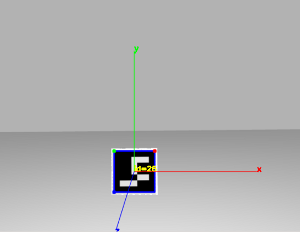

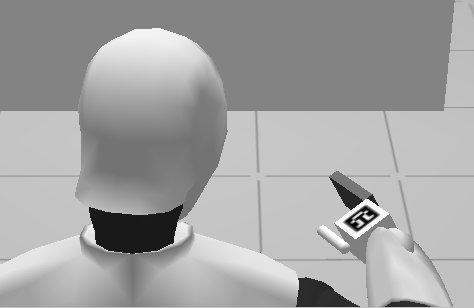

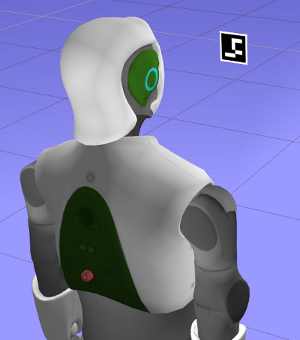

Test it with REEM

- Open a REEM in simulation with a marker floating in front of the robot. This also starts the robot's stereo cameras. Since this is only a vision test, the world contains nothing but the robot and the marker. An extra light source was added to compensate for the default darkness.

        roslaunch reem_gazebo reem_gazebo.launch world:=floating_marker

- Launch the image_proc node to get undistorted images from the cameras of the robot.

        ROS_NAMESPACE=/stereo/right rosrun image_proc image_proc image_raw:=image

- Start the single node, which tracks the specified marker and publishes its pose in the camera frame.

        roslaunch aruco_ros single.launch markerId:=26 markerSize:=0.08 eye:="right"

  The frame in which the pose is expressed can be chosen with the 'ref_frame' argument. The next example forces the marker pose to be published with respect to the robot base_link frame:

        roslaunch aruco_ros single.launch markerId:=26 markerSize:=0.08 eye:="right" ref_frame:=/base_link

- Visualize the result

        rosrun image_view image_view image:=/aruco_single/result
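The two single.launch invocations in this section differ only in their arguments, so a small wrapper can assemble them. The sketch below is a hypothetical helper (`single_launch_cmd` is not part of aruco_ros) that builds the `roslaunch` argument list from the parameters used in this section. It only constructs the command and leaves running it to the caller.

```python
def single_launch_cmd(marker_id, marker_size, eye="right", ref_frame=None):
    """Build the argument list for `roslaunch aruco_ros single.launch`."""
    cmd = [
        "roslaunch", "aruco_ros", "single.launch",
        f"markerId:={marker_id}",
        f"markerSize:={marker_size}",  # same value as markerSize in the commands above
        f"eye:={eye}",
    ]
    # ref_frame is optional; without it the pose is published in the camera frame.
    if ref_frame is not None:
        cmd.append(f"ref_frame:={ref_frame}")
    return cmd


cmd = single_launch_cmd(26, 0.08, ref_frame="/base_link")
print(" ".join(cmd))
```

On a machine where ROS and aruco_ros are installed, the list could be passed to `subprocess.run(cmd, check=True)`.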

CONTRIBUTING

|

aruco_ros repositoryrobot robotics aruco ros aruco-library fiducial-marker aruco-detector aruco-marker-detection aruco-ros fiducial-marker-detector aruco aruco_msgs aruco_ros |

|

|

Repository Summary

| Description | Software package and ROS wrappers of the Aruco Augmented Reality marker detector library |

| Checkout URI | https://github.com/pal-robotics/aruco_ros.git |

| VCS Type | git |

| VCS Version | humble-devel |

| Last Updated | 2025-04-10 |

| Dev Status | DEVELOPED |

| CI status | No Continuous Integration |

| Released | RELEASED |

| Tags | robot robotics aruco ros aruco-library fiducial-marker aruco-detector aruco-marker-detection aruco-ros fiducial-marker-detector |

| Contributing |

Help Wanted (0)

Good First Issues (0) Pull Requests to Review (0) |

Packages

| Name | Version |

|---|---|

| aruco | 5.0.5 |

| aruco_msgs | 5.0.5 |

| aruco_ros | 5.0.5 |

README

aruco_ros

Software package and ROS wrappers of the Aruco Augmented Reality marker detector library.

Features

-

High-framerate tracking of AR markers

-

Generate AR markers with given size and optimized for minimal perceptive ambiguity (when there are more markers to track)

-

Enhanced precision tracking by using boards of markers

-

ROS wrappers

Applications

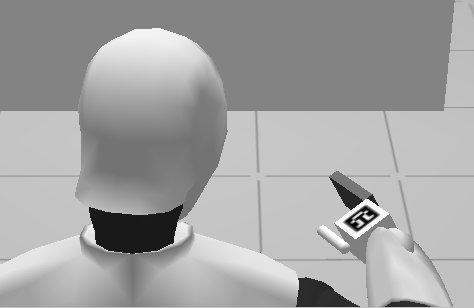

- Object pose estimation

- Visual servoing: track object and hand at the same time

ROS API

Messages

-

aruco_ros/Marker.msg

Header header uint32 id geometry_msgs/PoseWithCovariance pose float64 confidence -

aruco_ros/MarkerArray.msg

Header header aruco_ros/Marker[] markers

Kinetic changes

-

Updated the Aruco library to version 3.0.4

-

Changed the coordinate system to match the library’s, the convention is shown in the image below, following rviz conventions, X is red, Y is green and Z is blue.

Test it with REEM

- Open a REEM in simulation with a marker floating in front of the robot. This will start the stereo cameras of the robot too. Since this is only a vision test, there is nothing else in this world apart from the robot and a marker floating in front of it. An extra light source had to be added to compensate for the default darkness.

roslaunch reem_gazebo reem_gazebo.launch world:=floating_marker

- Launch the

image_procnode to get undistorted images from the cameras of the robot.

ROS_NAMESPACE=/stereo/right rosrun image_proc image_proc image_raw:=image

- Start the

singlenode which will start tracking the specified marker and will publish its pose in the camera frame

roslaunch aruco_ros single.launch markerId:=26 markerSize:=0.08 eye:="right"

the frame in which the pose is refered to can be chosen with the 'ref_frame' argument. The next example forces the marker pose to

be published with respect to the robot base_link frame:

roslaunch aruco_ros single.launch markerId:=26 markerSize:=0.08 eye:="right" ref_frame:=/base_link

- Visualize the result

rosrun image_view image_view image:=/aruco_single/result




Repository Summary

| Field | Value |
|---|---|
| Description | Software package and ROS wrappers of the Aruco Augmented Reality marker detector library |
| Checkout URI | https://github.com/pal-robotics/aruco_ros.git |
| VCS Type | git |
| VCS Version | noetic-devel |
| Last Updated | 2023-09-21 |
| Dev Status | DEVELOPED |
| CI status | No Continuous Integration |
| Released | RELEASED |
| Tags | robot robotics aruco ros aruco-library fiducial-marker aruco-detector aruco-marker-detection aruco-ros fiducial-marker-detector |
| Contributing | Help Wanted (0), Good First Issues (0), Pull Requests to Review (0) |

Packages

| Name | Version |
|---|---|
| aruco | 3.1.4 |
| aruco_msgs | 3.1.4 |
| aruco_ros | 3.1.4 |



Repository Summary

| Field | Value |
|---|---|
| Description | Software package and ROS wrappers of the Aruco Augmented Reality marker detector library |
| Checkout URI | https://github.com/pal-robotics/aruco_ros.git |
| VCS Type | git |
| VCS Version | melodic-devel |
| Last Updated | 2023-09-21 |
| Dev Status | DEVELOPED |
| CI status | No Continuous Integration |
| Released | RELEASED |
| Tags | robot robotics aruco ros aruco-library fiducial-marker aruco-detector aruco-marker-detection aruco-ros fiducial-marker-detector |
| Contributing | Help Wanted (0), Good First Issues (0), Pull Requests to Review (0) |

Packages

| Name | Version |
|---|---|
| aruco | 2.2.3 |
| aruco_msgs | 2.2.3 |
| aruco_ros | 2.2.3 |
