Repository Summary

| Description | A ROS4HRI-conformant node to track and extract 2D and 3D skeletons of humans from RGB/RGB-D cameras |

| Checkout URI | https://github.com/ros4hri/hri_fullbody.git |

| VCS Type | git |

| VCS Version | humble-devel |

| Last Updated | 2024-07-04 |

| Dev Status | UNMAINTAINED |

| CI status | No Continuous Integration |

| Released | UNRELEASED |

| Tags | No category tags. |

| Contributing |

Help Wanted (0)

Good First Issues (0) Pull Requests to Review (0) |

Packages

| Name | Version |

|---|---|

| hri_fullbody | 2.2.2 |

README

hri_fullbody

Overview

:warning: some of the links are yet to be updated and may be pointing to the original ROS page. As soon as all the involved components will be officially documented for ROS 2 as well, we will update this document.

hri_fullbody is a ROS4HRI-compatible

3D body pose estimation node.

It is built on top of Google Mediapipe 3D body pose estimation.

The node provides the 3D pose estimation for the detected humans in the scene, implementing a robust solution to self-occlusions.

This node can be used in two different modes: single body and multi-body.

In the former case, the node performs the whole body detection pipeline,

publishing information under the ROS4HRI naming convention regarding

the body id (on the /humans/bodies/tracked topic), the body bounding box,

and the jointstate of the body’s skeleton. In the latter, the node only

publishes jointstate information, expecting body detection to be performed

by another node and subscribing to /humans/bodies/tracked to read the ids

of the currently tracked bodies.

These two modes are required since Mediapipe body estimation

is not able to estimate more than one body pose per frame.

Therefore, in case we want more than one body pose estimated,

the adopted strategy is to rely on some other package implementing a human

body detection (and tracking in the best case) algorithm.

Such package should provide the hri_fullbody detect node the IDs of the

detected bodies and the relative images, cropped from the original image

following the detected bounding boxes.

To estimate the body position, the node does not need a RGB-D camera, only RGB is required. However, using RGB-D camera provides a more accurate depth estimation.

Important: to estimate the body depth without using a depth sensor, a calibrated RGB camera is required. You can follow this tutorial to properly calibrate your camera.

Launch

ros2 launch hri_fullbody hri_fullbody_with_args.launch.py <parameters>

ROS API

Parameters

Node parameters:

-

single_body(default:True): whether or not running in single body mode (see above for the single body vs multi-body modes). -

use_depth(default:False): whether or not to rely on depth images for estimating body movement in the scene. When this isFalse, the node estimates the body position in the scene solving a P6P problem for the face and approximating the position from this, using pinhole camera model geometry. -

stickman_debug(default:False): whether or not to publish frames representing the body skeleton directly using the raw results from mediapipe 3D body pose estimation. These debug frames are not oriented to align with the body links (ie, only the 3D location of the frame is useful). -

human_description_<body_id>(read-only): the URDF generated forbody_<body_id>. The node generates a URDF for each detected body and uses them to perform kinematically-consistent 3D body pose estimation.

hri_fullbody.launch parameters:

-

single_body(default:True): equivalent tosingle_bodynode parameter. -

use_depth(default:False): equivalent touse_depthnode parameter. -

stickman_debug(default:False): equivalent tostickman_debugnode parameter. -

rgb_camera(default: ` `): rgb camera topics namespace. -

rgb_camera_topic(default:$(arg rgb_camera)/image_raw): rgb camera raw image topic. -

rgb_camera_info(default:$(arg rgb_camera)/camera_info): rgb camera info topic. -

depth_camera(default: ` `): depth camera topics namespace. -

depth_camera_topic(default:$(arg depth_camera)/image_rect_raw): depth camera rectified raw image topic. -

depth_camera_info(default:$(arg depth_camera)/camera_info): depth camera info topic.

Topics

hri_fullbody follows the ROS4HRI conventions (REP-155). In particular,

refer to the REP to know the list and position of the 2D/3D skeleton

points published by the node.

Subscribed topics

-

/camera_info(sensor_msgs/CameraInfo): rgb camera meta information -

/depth_image(sensor_msgs/Image): depth image used to estimate the 3D body position with respect to the camera. -

/depth_info(sensor_msgs/CameraInfo): depth camera meta information

Single body mode only:

-

/image(sensor_msgs/Image): rgb image, processed for body detection and 3D body pose estimation.

Multi-body mode only:

-

/humans/bodies/tracked(hri_msgs/IdsList): list of the bodies currently detected. -

/humans/bodies/<body_id>/cropped(sensor_msgs/Image): image used to estimate the 3D body pose. -

/humans/bodies/<body_id>/roi(hri_msgs/NormalizedRegionOfInterest2D): body bounding box in normalized image coordinates.

Published topics

-

/humans/bodies/<body_id>/skeleton2d(hri_msgs/Skeleton2D): detected 2D skeleton points. -

/humans/bodies/<body_id>/joint_states(sensor_msgs/JointState): skeleton joints state. -

/humans/bodies/<body_id>/position: (geometry_msgs/PointStamped): filtered body position, representing the point between the hips of the tracked body. Only published whenuse_depth = True. -

/humans/bodies/<body_id>/velocity: (geometry_msgs/TwistStamped): filtered body velocity. Only published whenuse_depth = True.

Single body mode only:

-

/humans/bodies/tracked(hri_msgs/IdsList): list of the bodies currently detected. There will be only one body in the list. -

/humans/bodies/<body_id>/roi(hri_msgs/NormalizedRegionOfInterest2D): body bounding box in normalized image coordinates.

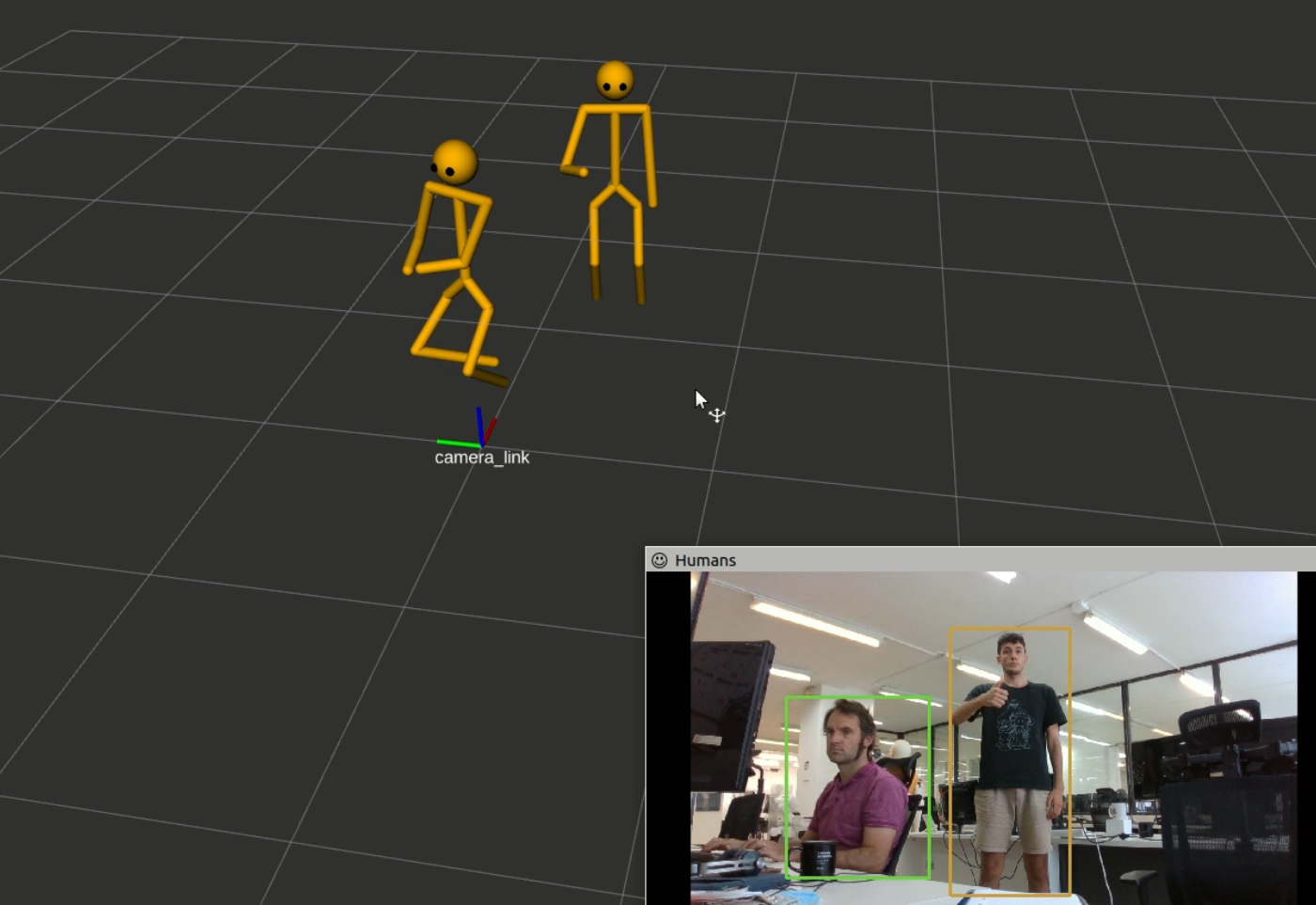

Visualization

It is possible to visualize the results of the body pose estimation in rviz using the hri_rviz Skeleton plugin. A visualization example is reported in the image above.

CONTRIBUTING

Contributing Guidelines

Thank you for your interest in contributing to this project Whether it’s a bug report, new feature, correction, or additional documentation, we greatly value feedback and contributions from our community.

Please read through this document before submitting any issues or pull requests to ensure we have all the necessary information to effectively respond to your bug report or contribution.

Reporting Bugs/Feature Requests

We welcome you to use the GitHub issue tracker to report bugs or suggest features.

When filing an issue, please check existing open issues, or recently closed, issues to make sure somebody else hasn’t already reported the issue. Please try to include as much information as you can. Details like these are incredibly useful:

- A reproducible test case or series of steps

- The version of our code being used

- Any modifications you’ve made relevant to the bug

- Anything unusual about your environment or deployment

Contributing via Pull Requests

Contributions via pull requests are much appreciated. Before sending us a pull request, please ensure that:

- You are working against the latest source on the master branch.

- You check existing open, and recently merged, pull requests to make sure someone else hasn’t addressed the problem already.

- You open an issue to discuss any significant work - we would hate for your time to be wasted.

To send us a pull request, please:

- Fork the repository.

- Modify the source; please focus on the specific change you are contributing. If you also reformat all the code, it will be hard for us to focus on your change.

- Ensure local tests pass. (

colcon test) - Commit to your fork using clear commit messages.

- Send a pull request, answering any default questions in the pull request interface.

- Pay attention to any automated CI failures reported in the pull request, and stay involved in the conversation.

GitHub provides additional documentation on forking a repository and creating a pull request.

Licensing

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise,

any Contribution intentionally submitted for inclusion in the Work

by You to the Licensor shall be under the terms and conditions of

this License, without any additional terms or conditions.

Notwithstanding the above, nothing herein shall supersede or modify

the terms of any separate license agreement you may have executed

with Licensor regarding such Contributions.