isaac_ros_pose_estimation repository

Repository Summary

| Field | Value |
|---|---|
| Description | Deep learned, NVIDIA-accelerated 3D object pose estimation |
| Checkout URI | https://github.com/nvidia-isaac-ros/isaac_ros_pose_estimation.git |
| VCS Type | git |
| VCS Version | main |
| Last Updated | 2025-02-28 |
| Dev Status | UNMAINTAINED |
| CI status | No Continuous Integration |
| Released | UNRELEASED |
| Tags | deep-learning gpu dope inference ros nvidia pose-estimation jetson ros2 tensorrt ros2-humble |
| Contributing | Help Wanted (0), Good First Issues (0), Pull Requests to Review (0) |

Packages

| Name | Version |
|---|---|
| isaac_ros_centerpose | 3.2.5 |
| isaac_ros_dope | 3.2.5 |
| isaac_ros_foundationpose | 3.2.5 |
| gxf_isaac_centerpose | 3.2.5 |
| gxf_isaac_dope | 3.2.5 |
| gxf_isaac_foundationpose | 3.2.5 |
| isaac_ros_pose_proc | 3.2.5 |

README

Isaac ROS Pose Estimation

Deep learned, NVIDIA-accelerated 3D object pose estimation

Overview

Isaac ROS Pose Estimation contains three ROS 2 packages that predict the pose of an object. The following table compares them:

| Node | Novel Object w/o Retraining | TAO Support | Speed | Quality | Maturity |
|---|---|---|---|---|---|
| isaac_ros_foundationpose | ✓ | N/A | Fast | Best | New |
| isaac_ros_dope | x | x | Fastest | Good | Time-tested |
| isaac_ros_centerpose | x | ✓ | Faster | Better | Established |

These packages use GPU acceleration for DNN inference to estimate the pose of an object. Perception functions can fuse the output prediction with the corresponding depth to provide the 3D pose of an object and its distance for navigation or manipulation.
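As an illustration of fusing a 2D prediction with depth, the sketch below lifts one pixel into the camera frame with the standard pinhole model and reports its distance from the camera. The helper names `PinholeIntrinsics`, `back_project`, and `distance_to` and the intrinsics values are hypothetical; this shows the geometry only, not how the Isaac ROS nodes perform the fusion.

```python
import math
from dataclasses import dataclass


@dataclass
class PinholeIntrinsics:
    """Hypothetical container for pinhole camera intrinsics, in pixels."""

    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def back_project(u: float, v: float, depth_m: float, k: PinholeIntrinsics) -> tuple:
    """Lift pixel (u, v) with metric depth into a 3D point (x, y, z) in the camera frame."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def distance_to(point: tuple) -> float:
    """Euclidean distance from the camera origin to a 3D point."""
    return math.sqrt(sum(c * c for c in point))


if __name__ == "__main__":
    k = PinholeIntrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    p = back_project(380.0, 240.0, 2.0, k)
    print(p, round(distance_to(p), 3))
```

With fx = fy = 600 and principal point (320, 240), a pixel at (380, 240) with 2.0 m of depth maps to (0.2, 0.0, 2.0), about 2.01 m from the camera.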

isaac_ros_foundationpose is used in a graph of nodes to estimate the pose of a novel object using 3D bounding cuboid dimensions. It is built on top of the FoundationPose model, a pre-trained deep learning model developed by NVLabs. FoundationPose is capable of both pose estimation and tracking on unseen objects without requiring fine-tuning, and its accuracy outperforms existing state-of-the-art methods.

FoundationPose comprises two distinct models: the refine model and the score model. The refine model takes initial pose hypotheses and refines them iteratively, then passes the refined hypotheses to the score model, which selects the final pose estimate. The refine model can also be used for tracking, updating the pose estimate from new image inputs and the previous frame’s pose estimate. Tracking is more efficient than pose estimation, reaching speeds above 120 FPS on the Jetson Orin platform.

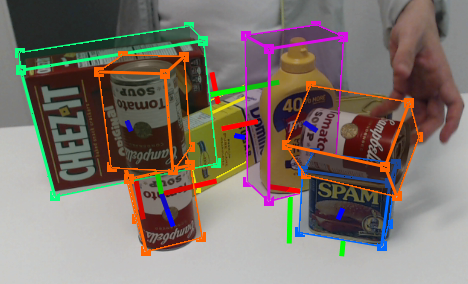

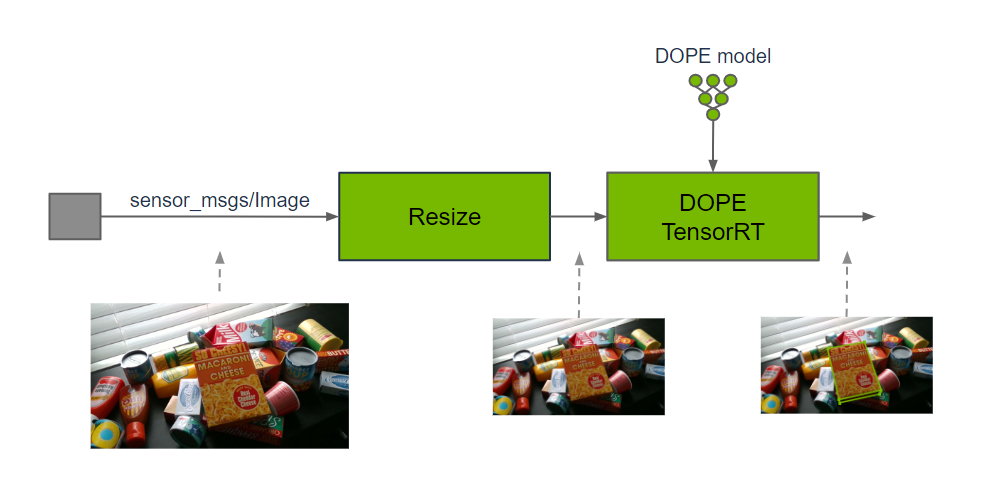
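The refine-then-score flow and the tracking path can be sketched as follows. This illustrates the control flow only and is not the FoundationPose implementation: `estimate_pose`, `track`, and the `refine`/`score` callables are hypothetical stand-ins for the two networks, and the toy example uses scalar "poses" so the logic is easy to follow.

```python
from typing import Callable, List, Sequence, TypeVar

Image = TypeVar("Image")
Pose = TypeVar("Pose")


def estimate_pose(
    image: Image,
    hypotheses: Sequence[Pose],
    refine: Callable[[Image, Pose], Pose],
    score: Callable[[Image, Pose], float],
    iterations: int = 3,
) -> Pose:
    """Hypothetical sketch: refine every hypothesis, then keep the best-scoring one."""
    refined: List[Pose] = []
    for pose in hypotheses:
        for _ in range(iterations):
            pose = refine(image, pose)  # iterative refinement of one hypothesis
        refined.append(pose)
    # The score model ranks the refined hypotheses; the top one is the estimate.
    return max(refined, key=lambda p: score(image, p))


def track(image: Image, previous_pose: Pose, refine: Callable[[Image, Pose], Pose]) -> Pose:
    """Hypothetical sketch: tracking seeds a refine pass with the previous pose, no scoring."""
    return refine(image, previous_pose)


def toy_refine(image: float, pose: float) -> float:
    """Toy refine: move the pose halfway toward the 'true' pose encoded in the image."""
    return pose + 0.5 * (image - pose)


def toy_score(image: float, pose: float) -> float:
    """Toy score: higher is better, so use the negative error."""
    return -abs(image - pose)


if __name__ == "__main__":
    best = estimate_pose(5.0, [0.0, 4.0, 10.0], toy_refine, toy_score)
    print(best)
    print(track(5.2, best, toy_refine))
```

Skipping the hypothesis sweep and the score model in `track` is consistent with tracking being cheaper than full estimation, though the real model's tracking step may differ from this simplification.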

isaac_ros_dope is used in a graph of nodes to estimate the pose of a known object with 3D bounding cuboid dimensions. To produce the estimate, a DOPE (Deep Object Pose Estimation) pre-trained model is required. Input images may need to be cropped and resized to maintain the aspect ratio and match the input resolution of DOPE. After DNN inference has produced an estimate, the DNN decoder will use the specified object type, along with the belief maps produced by model inference, to output object poses.
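One way to plan the crop and resize while preserving the aspect ratio is a center crop to the network's aspect ratio followed by a uniform scale. This is a sketch under assumptions: `plan_crop_resize` is a hypothetical helper, center cropping is one choice among several (letterbox padding is another), and the 640x480 target in the example is a placeholder rather than DOPE's documented input size.

```python
from typing import NamedTuple


class CropResize(NamedTuple):
    """Hypothetical description of an aspect-preserving crop followed by a resize."""

    x0: int       # left edge of the crop in the source image
    y0: int       # top edge of the crop in the source image
    width: int    # crop width in source pixels
    height: int   # crop height in source pixels
    scale: float  # uniform factor that maps the crop onto the network input


def plan_crop_resize(src_w: int, src_h: int, dst_w: int, dst_h: int) -> CropResize:
    """Center-crop the source to the destination aspect ratio, then scale uniformly."""
    dst_aspect = dst_w / dst_h
    if src_w / src_h > dst_aspect:
        # Source is wider than the target: trim equal amounts from left and right.
        crop_w, crop_h = round(src_h * dst_aspect), src_h
    else:
        # Source is taller than (or as wide as) the target: trim top and bottom.
        crop_w, crop_h = src_w, round(src_w / dst_aspect)
    x0 = (src_w - crop_w) // 2
    y0 = (src_h - crop_h) // 2
    # The crop already has the target aspect ratio, so one scale fits both axes (up to rounding).
    return CropResize(x0, y0, crop_w, crop_h, dst_w / crop_w)


if __name__ == "__main__":
    print(plan_crop_resize(1920, 1080, 640, 480))
```

For a 1920x1080 frame and a 640x480 target, the plan is a 1440x1080 crop starting at x0 = 240, scaled by 4/9.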

NVLabs has provided a DOPE pre-trained model using the HOPE dataset. HOPE stands for Household Objects for Pose Estimation. HOPE is a research-oriented dataset that uses toy grocery objects and 3D textured meshes of the objects for training on synthetic data. To use DOPE for other objects that are relevant to your application, the model needs to be trained with another dataset targeting these objects. For example, DOPE has been trained to detect dollies for use with a mobile robot that navigates under, lifts, and moves that type of dolly. To train your own DOPE model, please refer to the Training your Own DOPE Model Tutorial.

isaac_ros_centerpose has similarities to isaac_ros_dope in that both estimate an object pose; however, isaac_ros_centerpose provides additional functionality. The CenterPose DNN performs object detection on the image, generates 2D keypoints for the object, estimates the 6-DoF pose up to a scale, and regresses relative 3D bounding cuboid dimensions. This is performed on a known object class without knowing the instance; for example, a CenterPose model can detect a chair without having been trained on images of that specific chair.
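Because the pose is only recovered up to a scale, the regressed relative cuboid dimensions need one metric measurement before they describe a real object. The sketch below assumes the relative dimensions are (width, height, depth) proportions and that the object's true height is known from some other source; `metric_cuboid_corners` is a hypothetical helper, not part of the package.

```python
from itertools import product
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def metric_cuboid_corners(relative_dims: Vec3, known_height_m: float) -> List[Vec3]:
    """Hypothetical helper: turn relative (w, h, d) proportions into metric cuboid corners.

    Assumes only the ratios of relative_dims are meaningful and that the object's
    true height in meters is known, which fixes the otherwise unknown scale.
    Corners are returned in the object frame, centered on the cuboid.
    """
    w, h, d = relative_dims
    if h <= 0:
        raise ValueError("relative height must be positive")
    s = known_height_m / h  # metric scale resolving the ambiguity
    hx, hy, hz = w * s / 2, h * s / 2, d * s / 2  # half-extents in meters
    return [(sx * hx, sy * hy, sz * hz) for sx, sy, sz in product((-1, 1), repeat=3)]


if __name__ == "__main__":
    for corner in metric_cuboid_corners((0.5, 1.0, 0.5), known_height_m=0.9):
        print(corner)
```

Every corner keeps the proportions of the regressed dimensions; only the single known height turns them into meters.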

Pose estimation is a compute-intensive task and is therefore not performed at the frame rate of an input camera. To make efficient use of resources, object pose is estimated for a single frame and used as an input to navigation. Additional object pose estimates are then computed at a lower frequency than the input rate of a typical camera to further refine navigation in progress.
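Running pose estimation below the camera rate can be illustrated with a simple timestamp-based throttle. `PoseEstimationThrottle` is a hypothetical helper for illustration; the Isaac ROS graphs may schedule work differently. It forwards a frame only when at least one period of the target rate has elapsed since the last forwarded frame.

```python
from typing import Optional


class PoseEstimationThrottle:
    """Hypothetical helper that forwards camera frames to a slow estimator at a lower rate."""

    def __init__(self, target_hz: float) -> None:
        if target_hz <= 0:
            raise ValueError("target_hz must be positive")
        self.period_s = 1.0 / target_hz
        self._last_stamp: Optional[float] = None

    def should_process(self, stamp_s: float) -> bool:
        """Return True if this frame should be sent to pose estimation."""
        if self._last_stamp is None or stamp_s - self._last_stamp >= self.period_s:
            self._last_stamp = stamp_s  # measure the next period from this frame
            return True
        return False


if __name__ == "__main__":
    throttle = PoseEstimationThrottle(target_hz=2.0)
    stamps = [i * 0.25 for i in range(8)]  # a 4 Hz camera, for illustration
    print([t for t in stamps if throttle.should_process(t)])
```

Because the period is measured from the last forwarded frame, timestamp jitter can push the effective rate slightly below the target; advancing a fixed schedule instead is an alternative when a steadier rate matters more.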

Packages in this repository rely on accelerated DNN model inference using Triton or TensorRT from Isaac ROS DNN Inference.

For preprocessing, packages in this repository rely on the Isaac ROS DNN Image Encoder, which can also be found in Isaac ROS DNN Inference.
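Preprocessing for DNN inference commonly converts an 8-bit H x W x C image into a normalized, channel-first float tensor. The plain-Python sketch below shows only that layout change and per-channel normalization; `encode_image` is hypothetical, it is not the DNN Image Encoder's implementation, and the mean/std values in the example are placeholders rather than the values any particular model expects.

```python
from typing import List, Sequence


def encode_image(
    hwc: Sequence[Sequence[Sequence[int]]],
    mean: Sequence[float],
    std: Sequence[float],
) -> List[List[List[float]]]:
    """Convert an H x W x C 8-bit image into a C x H x W float tensor.

    Each value is scaled to [0, 1], then normalized per channel as (x - mean) / std.
    """
    height = len(hwc)
    width = len(hwc[0]) if height else 0
    channels = len(mean)
    if len(std) != channels:
        raise ValueError("mean and std must have one entry per channel")
    return [
        [
            [(hwc[y][x][c] / 255.0 - mean[c]) / std[c] for x in range(width)]
            for y in range(height)
        ]
        for c in range(channels)
    ]


if __name__ == "__main__":
    image = [[[255, 0, 51], [0, 255, 102]]]  # 1 x 2 pixels, 3 channels
    tensor = encode_image(image, mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5))
    print(len(tensor), len(tensor[0]), len(tensor[0][0]))  # channels, height, width
```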

Performance

| Sample Graph | Input Size | AGX Orin | Orin NX | Orin Nano Super 8GB | x86_64 w/ RTX 4090 |
|---|---|---|---|---|---|
| FoundationPose Pose Estimation Node | 720p | 1.54 fps, 780 ms @ 30Hz | – | – | 9.56 fps, 110 ms @ 30Hz |
| DOPE Pose Estimation Graph | VGA | 27.3 fps, 54 ms @ 30Hz | 15.2 fps, 73 ms @ 30Hz | – | 186 fps, 12 ms @ 30Hz |
| CenterPose Pose Estimation Graph | VGA | 44.8 fps, 43 ms @ 30Hz | 29.0 fps, 51 ms @ 30Hz | 29.8 fps, 50 ms @ 30Hz | 50.2 fps, 14 ms @ 30Hz |

Documentation

Please visit the Isaac ROS Documentation to learn how to use this repository.

Packages

Latest

Update 2024-12-10: Added pose estimate post-processing utilities

CONTRIBUTING

Isaac ROS Contribution Rules

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise, any Contribution intentionally submitted for inclusion in the Work by You to the Licensor shall be under the terms and conditions of this License, without any additional terms or conditions. Notwithstanding the above, nothing herein shall supersede or modify the terms of any separate license agreement you may have executed with Licensor regarding such Contributions.

Contributors must sign off each commit by adding a `Signed-off-by: ...` line to commit messages to certify that they have the right to submit the code they are contributing to the project according to the Developer Certificate of Origin (DCO).
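Git can add the trailer automatically: `git commit -s` (or `--signoff`) appends a `Signed-off-by:` line built from your configured `user.name` and `user.email`. To check messages before pushing, a small validator like the hypothetical `has_dco_signoff` below can be used; its regular expression is an assumption about an acceptable trailer format and may be stricter or looser than any check the project actually runs.

```python
import re

# Assumed trailer format: "Signed-off-by: Full Name <email@domain>", one per line.
SIGNOFF_RE = re.compile(r"^Signed-off-by: .+ <[^<>@\s]+@[^<>\s]+>$", re.MULTILINE)


def has_dco_signoff(commit_message: str) -> bool:
    """Hypothetical check: True if any line of the message is a DCO sign-off trailer."""
    return SIGNOFF_RE.search(commit_message) is not None


if __name__ == "__main__":
    msg = "Fix typo in README\n\nSigned-off-by: Jane Doe <jane@example.com>\n"
    print(has_dco_signoff(msg))
```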
