Repository Summary

| Field | Value |
|---|---|
| Description | [IROS 2024] Touch-GS: Visual-Tactile Supervised 3D Gaussian Splatting |
| Checkout URI | https://github.com/armlabstanford/touch-gs.git |
| VCS Type | git |
| VCS Version | main |
| Last Updated | 2024-12-04 |
| Dev Status | UNMAINTAINED |
| CI status | No Continuous Integration |
| Released | UNRELEASED |
| Tags | No category tags. |
| Contributing | Help Wanted (0), Good First Issues (0), Pull Requests to Review (0) |

Packages

| Name | Version |
|---|---|
| capturedata | 0.1.1 |
| dtv2_tactile_camera | 0.1.1 |
| vtnf_camera | 0.0.0 |

README

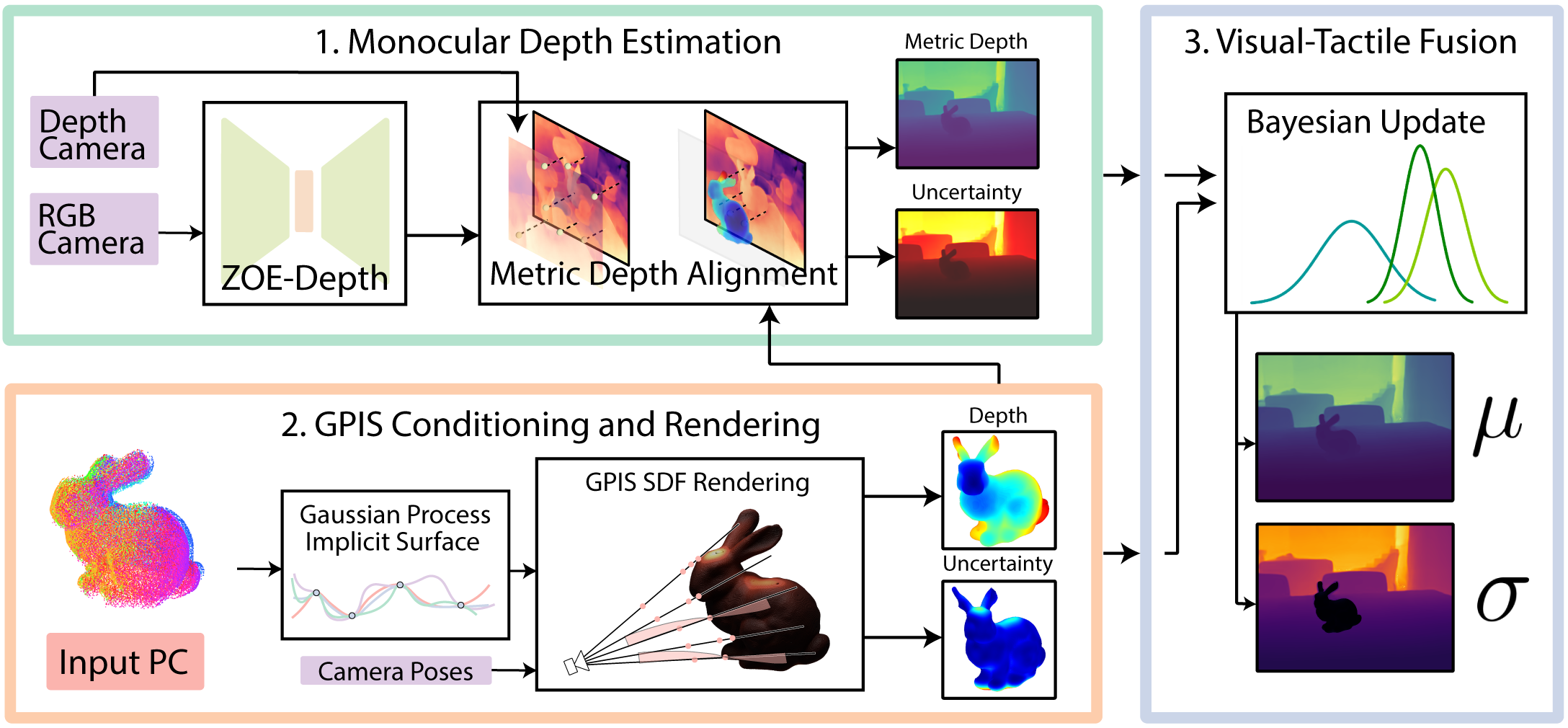

🐇 Touch-GS: Visual-Tactile Supervised 3D Gaussian Splatting

[Aiden Swann](https://aidenswann.com/), Matthew Strong, Won Kyung Do, Gadiel Sznaier Camps, Mac Schwager, Monroe Kennedy III

International Conference on Intelligent Robots and Systems (IROS) 2024 Oral!

This repo contains the code and data for Touch-GS.

Quick Start and Setup

The pipeline has been tested on Ubuntu 22.04.

Requirements:

- CUDA 11+

- Python 3.8+

- Conda or Mamba (optional)

Dependencies (from Nerfstudio)

Install PyTorch with CUDA (this repo has been tested with CUDA 11.7 and CUDA 11.8) and tiny-cuda-nn.

cuda-toolkit is required for building tiny-cuda-nn.

For CUDA 11.8:

```bash
conda create --name touch-gs python=3.8
conda activate touch-gs
pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cu118
conda install -c "nvidia/label/cuda-11.8.0" cuda-toolkit
pip install ninja git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch
```

See the Dependencies section of Nerfstudio's installation documentation for more details.
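Once the commands above have run, a quick sanity check can confirm the environment before building anything else. The sketch below is a hypothetical helper (check_environment is not part of the repo) that reports the Python version, the installed PyTorch version, the CUDA version PyTorch was built against, whether a GPU is visible, and whether the tiny-cuda-nn bindings (importable as tinycudann) are present. It uses only standard PyTorch attributes and returns a report instead of raising if PyTorch is missing or broken.

```python
import importlib
import importlib.util
import sys


def check_environment():
    """Summarize the Python/PyTorch/CUDA/tiny-cuda-nn setup without raising."""
    report = {
        "python": sys.version.split()[0],
        "python_ok": sys.version_info >= (3, 8),
        "torch_version": None,
        "torch_cuda_version": None,  # CUDA version the PyTorch wheel was built with
        "cuda_available": False,     # whether PyTorch can see a GPU right now
        # Only checks that the package is installed, not that it imports cleanly
        "tinycudann_installed": importlib.util.find_spec("tinycudann") is not None,
        "torch_error": None,
    }
    if importlib.util.find_spec("torch") is None:
        report["torch_error"] = "torch is not installed"
        return report
    try:
        torch = importlib.import_module("torch")
    except (ImportError, OSError) as exc:  # e.g. missing CUDA shared libraries
        report["torch_error"] = str(exc)
        return report
    report["torch_version"] = torch.__version__
    report["torch_cuda_version"] = torch.version.cuda  # None for CPU-only builds
    report["cuda_available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

With the cu118 wheels installed above, torch_cuda_version should read 11.8; if cuda_available is False, the driver or GPU visibility is the likely culprit rather than the Python packages.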

Repo Cloning

Because the repo uses submodules, clone it recursively:

```bash
git clone --recurse-submodules https://github.com/armlabstanford/Touch-GS
```

Installing the GPIS code

We implemented our own GPIS (Gaussian Process Implicit Surface) from scratch here!

Follow the installation steps in that repo.
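For readers new to GPIS, the sketch below illustrates the general idea in pure Python, in 2D: a Gaussian process is fit to signed values (negative inside, zero on the surface, positive outside), so the zero level set of the posterior mean is the surface estimate and the posterior variance shows where the shape is poorly constrained. This is generic textbook GP regression with a squared-exponential kernel, not the Touch-GS GPIS implementation; SimpleGPIS, the kernel, the length scale, and the inside/outside labeling are all assumptions chosen for illustration.

```python
import math


def rbf_kernel(a, b, length_scale=0.5, signal_var=1.0):
    """Squared-exponential kernel between two points of equal dimension."""
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return signal_var * math.exp(-0.5 * sq_dist / length_scale ** 2)


def cholesky(mat):
    """Lower-triangular L with L @ L.T == mat (mat must be symmetric positive definite)."""
    n = len(mat)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(mat[i][i] - s)
            else:
                low[i][j] = (mat[i][j] - s) / low[j][j]
    return low


def solve_lower(low, b):
    """Solve L x = b by forward substitution."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(low[i][k] * x[k] for k in range(i))) / low[i][i])
    return x


def solve_upper_from_lower(low, b):
    """Solve L.T x = b by back substitution."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x


class SimpleGPIS:
    """Toy GP implicit surface: f < 0 inside, f = 0 on the surface, f > 0 outside."""

    def __init__(self, length_scale=0.5, noise_var=1e-4):
        self.length_scale = length_scale
        self.noise_var = noise_var

    def fit(self, points, values):
        self.points = list(points)
        gram = [[rbf_kernel(p, q, self.length_scale) for q in self.points]
                for p in self.points]
        for i in range(len(self.points)):
            gram[i][i] += self.noise_var  # observation noise / jitter on the diagonal
        self.low = cholesky(gram)
        # alpha = (K + noise * I)^-1 y, computed with two triangular solves
        self.alpha = solve_upper_from_lower(self.low, solve_lower(self.low, list(values)))
        return self

    def predict(self, query):
        """Return (posterior mean, posterior variance) of the implicit function at query."""
        k_star = [rbf_kernel(query, p, self.length_scale) for p in self.points]
        mean = sum(k * a for k, a in zip(k_star, self.alpha))
        v = solve_lower(self.low, k_star)
        var = rbf_kernel(query, query, self.length_scale) - sum(x * x for x in v)
        return mean, max(var, 0.0)


if __name__ == "__main__":
    # Unit circle: 8 surface samples (0), one interior point (-1), four exterior points (+1)
    surface = [(math.cos(i * math.pi / 4), math.sin(i * math.pi / 4)) for i in range(8)]
    exterior = [(2.0, 0.0), (0.0, 2.0), (-2.0, 0.0), (0.0, -2.0)]
    gp = SimpleGPIS().fit(surface + [(0.0, 0.0)] + exterior,
                          [0.0] * len(surface) + [-1.0] + [1.0] * len(exterior))
    for q in [(0.0, 0.0), (1.0, 0.0), (1.5, 0.0), (5.0, 5.0)]:
        print(q, gp.predict(q))
```

The hand-written Cholesky solve is O(n³) in plain Python and only practical for a handful of points; a real implementation would use a linear algebra library such as NumPy or PyTorch.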

Install Our Version of Nerfstudio

You can find more detailed instructions in Nerfstudio’s README.

```bash
# install nerfstudio
bash install_ns.sh
```

Touch-GS Data Setup and Training

We provide an end-to-end pipeline that sets up the data, trains, and evaluates our method. The code for running the ablations (including the baselines) will be released soon!

To prepare each scene:

- Install Python packages

  ```bash
  pip install -r requirements.txt
  ```

- Real Bunny Scene (our method)

  ```bash
  bash scripts/train_bunny_real.sh
  ```

- Mirror Scene

  ```bash
  bash scripts/train_mirror_data.sh
  ```

- Prism Scene

  ```bash
  bash scripts/train_block_data.sh
  ```

- Bunny Blender Scene (conversion to the unified data format is in progress)
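To train several scenes in one go, a small driver can call the scripts listed above in sequence. This is a hypothetical convenience wrapper, not part of the repo: the scene keys are made-up labels, and it assumes the scripts are meant to be run from the repository root, since they are referenced by relative paths.

```python
import subprocess
from pathlib import Path

# Hypothetical scene labels mapped to the training scripts listed in this README
SCENE_SCRIPTS = {
    "bunny_real": "scripts/train_bunny_real.sh",
    "mirror": "scripts/train_mirror_data.sh",
    "prism": "scripts/train_block_data.sh",
}


def run_scenes(scenes, repo_root=".", dry_run=False):
    """Run each scene's training script in order and return the commands issued."""
    commands = []
    for scene in scenes:
        if scene not in SCENE_SCRIPTS:
            raise KeyError(f"unknown scene {scene!r}; expected one of {sorted(SCENE_SCRIPTS)}")
        cmd = ["bash", SCENE_SCRIPTS[scene]]
        commands.append(cmd)
        if not dry_run:
            # check=True stops the batch as soon as one script exits with an error
            subprocess.run(cmd, cwd=Path(repo_root), check=True)
    return commands


if __name__ == "__main__":
    for cmd in run_scenes(["bunny_real", "mirror", "prism"], dry_run=True):
        print(" ".join(cmd))
```

Running with dry_run=True first prints the commands without starting any training, which is a cheap way to confirm the script paths before a long batch.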

Get Renders

The main script writes the test-set renders to exp-name-renders inside touch-gs-data.
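To gather the test-set renders for inspection, a helper can collect the image files from the render directory. The layout here is an assumption: it treats exp-name as a placeholder for your experiment name, looks for <data_root>/<exp_name>-renders, and accepts .png, .jpg, and .jpeg files at any depth. list_renders is a hypothetical name, not a function in the repo.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def list_renders(data_root, exp_name):
    """Return sorted image paths under <data_root>/<exp_name>-renders (assumed layout)."""
    render_dir = Path(data_root) / f"{exp_name}-renders"
    if not render_dir.is_dir():
        raise FileNotFoundError(f"no render directory at {render_dir}")
    # rglob("*") also walks subfolders, in case renders are split into groups
    return sorted(
        p for p in render_dir.rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


if __name__ == "__main__":
    import sys
    # Usage: python list_renders.py <data_root> <exp_name>
    for path in list_renders(sys.argv[1], sys.argv[2]):
        print(path)
```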

Get Rendered Video

You can render videos along a custom camera path, as detailed here. This is how we produced the videos on our website.