dnn_node package from hobot_dnn repo

Packages in this repo: dnn_benchmark_example, dnn_node, dnn_node_example, dnn_node_sample

Package Summary

| Field | Value |
|---|---|
| Tags | No category tags. |
| Version | 2.4.2 |
| License | Apache License 2.0 |
| Build type | AMENT_CMAKE |
| Use | RECOMMENDED |

Repository Summary

| Field | Value |
|---|---|
| Description | |
| Checkout URI | https://github.com/d-robotics/hobot_dnn.git |
| VCS Type | git |
| VCS Version | develop |
| Last Updated | 2024-12-16 |
| Dev Status | UNKNOWN |
| CI status | No Continuous Integration |
| Released | UNRELEASED |
| Tags | No category tags. |



Maintainers

- zhukao


Getting Started with DNN Node

Introduction

This document explains how to use a model and image data on the RDK X3/X5 to run model inference on the BPU processor and process the parsed model outputs.

The DNN Node package is part of the robot development platform. It builds on libdnn and the ROS2 Node to provide a simpler, more user-friendly model integration interface for application development. Its functions include model management, input processing and result parsing based on model descriptions, and memory allocation management for model outputs.

The DnnNode class in the DNN Node package is an abstract base class (its interfaces are virtual) that defines the data structures and interfaces for model integration development. Users need to inherit the DnnNode class and implement the pre-processing, post-processing, and configuration interfaces.

Development Environment

- Programming Language: C/C++

- Development Platform: X3/Ultra/X5/X86

- System Version: Ubuntu 20.04/Ubuntu 22.04

- Compilation Toolchain: Linux GCC 9.3.0/Linaro GCC 11.4.0

Compilation

- X3: Supports compilation on the X3 Ubuntu system and cross-compilation using Docker on a PC.

- Ultra: Supports compilation on the Ultra Ubuntu system and cross-compilation using Docker on a PC.

- X5: Supports compilation on the X5 Ubuntu system and cross-compilation using Docker on a PC.

- X86: Supports compilation on the X86 Ubuntu system.

Compilation options control which dependencies and features are built into the package.

Dependency Libraries

X3

- dnn: 1.18.4

- opencv: 3.4.5

Ultra

- dnn: 1.17.3

- opencv: 3.4.5

X5

- dnn: 1.23.5

- opencv: 3.4.5

X86

- dnn: 1.12.3

- opencv: 3.4.5

Compilation on X3/Ultra/X5 Ubuntu System

- Compilation Environment Confirmation

  - The current compilation terminal has set the TROS environment variable: `source /opt/tros/setup.bash`.

  - The ROS2 software package build system `ament_cmake` has been installed. Installation command: `apt update; apt-get install python3-catkin-pkg; pip3 install empy`.

  - The ROS2 compilation tool `colcon` has been installed. Installation command: `pip3 install -U colcon-common-extensions`.

- Compilation

  - Compile the `dnn_node` package: `colcon build --packages-select dnn_node`

Cross-Compilation for X3/Ultra/X5 using Docker

- Compilation Environment Confirmation

  - Compile inside Docker, where TROS has already been compiled. For Docker installation, cross-compilation instructions, TROS compilation, and deployment, please refer to the D-Robotics Robot Platform User Manual.

- Compilation

  - Compile the `dnn_node` package:

        # RDK X3
        bash robot_dev_config/build.sh -p X3 -s dnn_node

        # RDK Ultra
        bash robot_dev_config/build.sh -p Rdkultra -s dnn_node

        # RDK X5
        bash robot_dev_config/build.sh -p X5 -s dnn_node

Compilation on X86 Ubuntu System for X86

- Compilation Environment Confirmation

  - X86 Ubuntu version: ubuntu20.04

- Compilation

  - Compilation command:

        colcon build --packages-select dnn_node \
          --merge-install \
          --cmake-force-configure \
          --cmake-args \
          --no-warn-unused-cli \
          -DPLATFORM_X86=ON \
          -DTHIRD_PARTY=`pwd`/../sysroot_docker

Usage Guide

Users need to inherit the DnnNode base class and implement its virtual interfaces, such as the configuration interface.

For data and interface descriptions in the dnn node package, please refer to the API manual: docs/API-Manual/API-Manual.md

Workflow

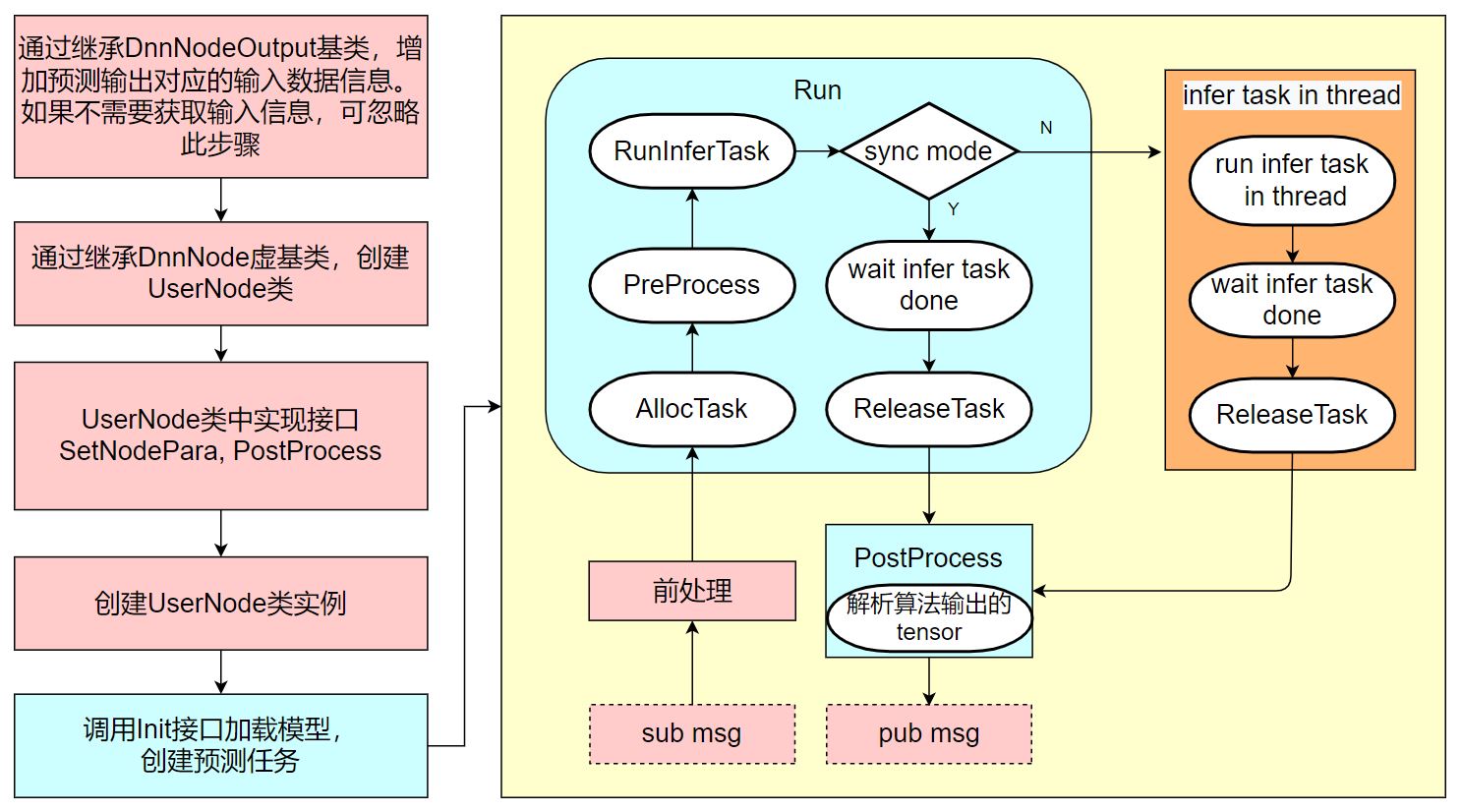

There are two processes involved in usage, namely the initialization process and the runtime process.

The initialization process involves inheriting the dnn node base class to create a user node, implementing the virtual interfaces SetNodePara and PostProcess, and calling the base class's Init interface to complete initialization.

The runtime process performs inference and business logic. Taking an algorithm that infers on image data as an example: the node subscribes to image messages (sub msg), converts each image into the algorithm's input data type (preprocessing), and calls the base class's Run interface to run inference. When inference completes, the PostProcess callback delivers the algorithm's output tensor data, which the user parses and publishes as structured AI data.
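The two processes can be sketched without the real library. In the minimal example below, `MockDnnNode`, `MockNodePara`, and `MockOutput` are hypothetical stand-ins written for illustration only; the real `DnnNode` is a ROS2 node with different signatures, and the "inference" here simply copies the input. Only the interface names `SetNodePara`, `PostProcess`, `Init`, and `Run` come from the text.

```cpp
#include <algorithm>
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-ins for the dnn_node types (assumptions, not the real API).
struct MockNodePara {
  std::string model_file;
  int task_num = 2;
};
struct MockOutput {
  std::vector<float> tensor;  // stands in for the model's output tensors
};

class MockDnnNode {
 public:
  virtual ~MockDnnNode() = default;

  // Initialization process: create the parameters, then let the subclass fill them in.
  int Init() {
    para_ = std::make_shared<MockNodePara>();
    return SetNodePara();
  }

  // Runtime process: "infer" (here: copy the input) and hand the result to PostProcess.
  int Run(const std::vector<float> &input) {
    if (!para_) return -1;  // Init has not been called
    auto output = std::make_shared<MockOutput>();
    output->tensor = input;
    return PostProcess(output);
  }

 protected:
  virtual int SetNodePara() = 0;
  virtual int PostProcess(const std::shared_ptr<MockOutput> &output) = 0;
  std::shared_ptr<MockNodePara> para_;
};

// The user node implements the two virtual interfaces.
class UserNode : public MockDnnNode {
 public:
  float last_max = 0.0f;

 protected:
  int SetNodePara() override {
    assert(para_ != nullptr);         // Init creates para_ before calling this hook
    para_->model_file = "model.hbm";  // hypothetical model path
    return 0;
  }
  int PostProcess(const std::shared_ptr<MockOutput> &output) override {
    if (!output || output->tensor.empty()) return -1;
    last_max = *std::max_element(output->tensor.begin(), output->tensor.end());
    return 0;
  }
};
```

In this sketch, calling `Run` before `Init` fails, which mirrors the order of the two processes described above.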

How to Use

Select the model_task_type for algorithm inference

When configuring the model management and inference parameters dnn_node_para_ptr_ using the SetNodePara interface, specify the model_task_type used for algorithm inference. The dnn node automatically creates a corresponding type of task based on the configured type.

Task types include ModelInferType and ModelRoiInferType, with the default being ModelInferType.

Use the ModelRoiInferType task type when the algorithm input contains roi (Region of Interest), such as in the case of hand keypoint detection algorithms where the input is an image and the roi coordinates of the hands in the image.

For all other cases, select ModelInferType. Examples are detection and classification algorithms that take only images as input, such as YOLO, FCOS, and mobilenetv2.

Set the number of inference tasks

A model can run multiple inference tasks, so several frames can be inferred in parallel, which improves BPU utilization and the inference output frame rate. During the dnn node initialization phase (when the Init interface is called), inference tasks are created in advance according to the number of tasks configured by the user.

When configuring the model management and inference parameters dnn_node_para_ptr_ using the SetNodePara interface, specify the number of algorithm inference tasks as task_num. The default is 2. If a single inference takes a long time (resulting in a lower output frame rate), specify more tasks.
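As a rough illustration of the two settings above, the sketch below fills a hypothetical `MockNodePara` (a stand-in, not the real parameter type) with a task type and a task count. The sizing rule in `EstimateTaskNum` is only a rule of thumb assumed here, not something the dnn node prescribes: to sustain a target frame rate, about `infer_time_ms * target_fps / 1000` inferences are in flight at once.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical mirror of the task types and parameters named in the text;
// the real definitions come from the dnn_node headers.
enum class ModelTaskType { ModelInferType, ModelRoiInferType };

struct MockNodePara {
  ModelTaskType model_task_type = ModelTaskType::ModelInferType;
  int task_num = 2;  // default stated in the text
};

// Rule-of-thumb estimate (an assumption, not part of dnn_node):
// ceil(infer_time_ms * target_fps / 1000), never below the default of 2.
int EstimateTaskNum(int infer_time_ms, int target_fps) {
  assert(infer_time_ms >= 0 && target_fps >= 0);
  const int in_flight = (infer_time_ms * target_fps + 999) / 1000;  // ceiling division
  return std::max(2, in_flight);
}

MockNodePara MakePara(bool input_has_roi, int infer_time_ms, int target_fps) {
  MockNodePara para;
  para.model_task_type = input_has_roi ? ModelTaskType::ModelRoiInferType
                                       : ModelTaskType::ModelInferType;
  para.task_num = EstimateTaskNum(infer_time_ms, target_fps);
  return para;
}
```

For example, an 80 ms inference at 30 fps gives an estimate of 3 tasks, while a 20 ms inference stays at the default of 2. Measured frame rates should decide the final value.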

Prepare algorithm input data (preprocessing)

Preprocessing involves preparing the data into the data type required for algorithm input.

For algorithms that take images as input, the dnn node provides the hobot::dnn_node::ImageProc::GetNV12PyramidFromNV12Img interface to generate NV12PyramidInput type data from nv12 encoded image data for algorithm inference.
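Before handing a buffer to an NV12-based interface, it can help to check that the buffer size matches the image dimensions. The sketch below relies only on the NV12 layout itself (a full-resolution Y plane followed by an interleaved half-resolution UV plane); the helper names are hypothetical and are not part of the dnn node.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// NV12 subsamples chroma by 2 in both directions, so width and height must be even.
bool IsValidNv12Size(int width, int height) {
  return width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0;
}

// Total bytes: Y plane (width * height) plus UV plane (width * height / 2).
std::size_t Nv12BufferSize(int width, int height) {
  assert(IsValidNv12Size(width, height));
  const std::size_t y_size = static_cast<std::size_t>(width) * height;
  return y_size + y_size / 2;
}

// Hypothetical pre-check to run before preprocessing an NV12 image.
bool CheckNv12Buffer(const std::vector<std::uint8_t> &buffer, int width, int height) {
  return IsValidNv12Size(width, height) &&
         buffer.size() == Nv12BufferSize(width, height);
}
```

A 1920x1080 NV12 frame, for instance, occupies 3110400 bytes.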

Parse the tensor output from the algorithm model

After inference completes, the PostProcess callback delivers the algorithm's output tensor data. Users need to parse the tensor data into structured AI data.

For example, for detection algorithms, a custom model output parsing method would be as follows:

    // Define the data type for algorithm output
    struct DetResult {
      float xmin;
      float ymin;
      float xmax;
      float ymax;
      float score;
    };

    // Custom algorithm output parsing method
    // - Parameters
    //   - [in] node_output The DnnNodeOutput containing the algorithm inference output
    //   - [in/out] results Parsed inference results
    // - Return Value
    //   - 0 Success
    //   - -1 Failure
    int32_t Parse(const std::shared_ptr<hobot::dnn_node::DnnNodeOutput> &node_output,
                  std::vector<std::shared_ptr<DetResult>> &results);

This defines the data type DetResult for the algorithm output and the parsing method Parse, which parses the model output in node_output (containing std::vector<std::shared_ptr<DNNTensor>> output_tensors) and stores the structured AI data in results.
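What the body of such a parsing method might look like depends entirely on the model's output layout. The sketch below assumes a hypothetical layout, a flat float buffer with five values per box (`xmin, ymin, xmax, ymax, score`), and works on a plain `std::vector<float>` instead of the real `DNNTensor`, so `ParseBoxes` and `kValuesPerBox` are illustrative names only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

struct DetResult {
  float xmin;
  float ymin;
  float xmax;
  float ymax;
  float score;
};

// Assumed layout: 5 consecutive floats per detection box.
constexpr std::size_t kValuesPerBox = 5;

// Returns 0 on success, -1 if the buffer does not match the assumed layout.
// Boxes scoring below score_threshold are dropped.
std::int32_t ParseBoxes(const std::vector<float> &tensor, float score_threshold,
                        std::vector<std::shared_ptr<DetResult>> &results) {
  if (tensor.size() % kValuesPerBox != 0) return -1;
  for (std::size_t i = 0; i < tensor.size(); i += kValuesPerBox) {
    const float score = tensor[i + 4];
    if (score < score_threshold) continue;
    results.push_back(std::make_shared<DetResult>(
        DetResult{tensor[i], tensor[i + 1], tensor[i + 2], tensor[i + 3], score}));
  }
  return 0;
}
```

A real parser would also handle dequantization, anchors, and non-maximum suppression as the model requires.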

In addition, the dnn node has built-in output parsing methods for various detection, classification, and segmentation models; please refer to the FAQ for details.

FAQ

- How to obtain the algorithm inference input and output frame rates, inference time, etc.?

The DnnNodeOutput type data output after inference contains the inference statistics in rt_stat.

Among them, infer_time_ms represents the inference time for the current frame. The input and output frame rates are calculated on a per-second basis. When fps_updated is true, it means that the frame rate statistics have been updated for the current frame.
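A typical use of these statistics is to log them once per update. In the sketch below, `MockRunTimeStat` is a hypothetical stand-in for the type behind rt_stat: `infer_time_ms` and `fps_updated` are named in the text, while `input_fps` and `output_fps` are assumed field names for the two frame rates.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical stand-in for the statistics carried in rt_stat.
struct MockRunTimeStat {
  int infer_time_ms = 0;     // named in the text
  bool fps_updated = false;  // named in the text
  float input_fps = 0.0f;    // assumed field name
  float output_fps = 0.0f;   // assumed field name
};

// Builds a log line only when the frame rate statistics were refreshed,
// so the log is written about once per second instead of once per frame.
std::string FormatStat(const MockRunTimeStat &stat) {
  if (!stat.fps_updated) return "";
  std::ostringstream oss;
  oss << "input_fps=" << stat.input_fps << " output_fps=" << stat.output_fps
      << " infer_time_ms=" << stat.infer_time_ms;
  return oss.str();
}
```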

- Does the resolution of the subscribed images need to match the algorithm input resolution?

No, it is not required.

During the preparation of algorithm input data (preprocessing), the dnn node provides the hobot::dnn_node::ImageProc::GetNV12PyramidFromNV12Img interface to generate NV12PyramidInput data from NV12-encoded image data for use as algorithm input.

When processing images, if the input image resolution is smaller than the model input resolution, the input image is placed in the top-left area and the remainder is padded; if the input image resolution is larger than the model input resolution, the input image is cropped to its top-left area.

Alternatively, hobotcv can resize the input image to the algorithm input resolution, which retains all of the image information. Refer to dnn_node_sample for usage.
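The top-left padding and cropping behaviour described in this answer can be illustrated on a single image plane. This is a minimal sketch, not the implementation inside GetNV12PyramidFromNV12Img: `FitTopLeft` is a hypothetical helper, it handles only one 8-bit plane (for NV12 the UV plane would need the same treatment at half height), and the pad value of 0 is an assumption.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Copies src into the top-left corner of a dst_w x dst_h plane.
// Smaller inputs are padded with pad_value; larger inputs are cropped.
std::vector<std::uint8_t> FitTopLeft(const std::vector<std::uint8_t> &src,
                                     int src_w, int src_h, int dst_w, int dst_h,
                                     std::uint8_t pad_value = 0) {
  assert(src_w > 0 && src_h > 0 && dst_w > 0 && dst_h > 0);
  assert(src.size() == static_cast<std::size_t>(src_w) * src_h);
  std::vector<std::uint8_t> dst(static_cast<std::size_t>(dst_w) * dst_h, pad_value);
  const int copy_w = std::min(src_w, dst_w);
  const int copy_h = std::min(src_h, dst_h);
  for (int row = 0; row < copy_h; ++row) {
    std::copy_n(src.begin() + static_cast<std::ptrdiff_t>(row) * src_w, copy_w,
                dst.begin() + static_cast<std::ptrdiff_t>(row) * dst_w);
  }
  return dst;
}
```

Because cropping discards the right and bottom parts of a larger image, resizing is the safer choice when objects may appear anywhere in the frame.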

- Using algorithm input information in the algorithm output parsing method and post-processing PostProcess

The input parameter type for PostProcess is hobot::dnn_node::DnnNodeOutput. Users can inherit the DnnNodeOutput data type and add the required data to use.

For example, if PostProcess requires a parameter of type uint64_t and the corresponding parameter for each frame inference input is different, it can be extended as follows:

    struct SampleOutput : public DnnNodeOutput {
      uint64_t para;
    };

Set the value of para in PreProcess and use it in PostProcess.
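The round trip from PreProcess to PostProcess can be sketched as follows. `MockNodeOutput` is a hypothetical stand-in for DnnNodeOutput and is assumed to be polymorphic (it has a virtual destructor), which is what makes the downcast with `std::dynamic_pointer_cast` possible; the free functions `PreProcess` and `PostProcess` here are simplified illustrations, not the real member signatures.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>

// Hypothetical stand-in for hobot::dnn_node::DnnNodeOutput (assumed polymorphic).
struct MockNodeOutput {
  virtual ~MockNodeOutput() = default;
};

// User extension carrying one extra per-frame value.
struct SampleOutput : public MockNodeOutput {
  std::uint64_t para = 0;
};

// Preprocessing side: create the extended output and attach the per-frame value.
std::shared_ptr<MockNodeOutput> PreProcess(std::uint64_t para) {
  auto output = std::make_shared<SampleOutput>();
  output->para = para;
  return output;  // handed over as the base type
}

// Post-processing side: recover the extended type; fail if it is something else.
int PostProcess(const std::shared_ptr<MockNodeOutput> &node_output,
                std::uint64_t &para_out) {
  auto sample = std::dynamic_pointer_cast<SampleOutput>(node_output);
  if (!sample) return -1;
  para_out = sample->para;
  return 0;
}
```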

- Match Algorithm Output with Input

The msg_header member (of type std::shared_ptr<std_msgs::msg::Header>) of hobot::dnn_node::DnnNodeOutput, the input parameter of PostProcess, is filled with the header of the image message subscribed in PreProcess. It can be used to match each output to its corresponding input.
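One way to do the matching is to cache per-input information under the header timestamp and look it up again in PostProcess. The sketch below is an assumption about how a user might do this: `MockHeader` mirrors only the stamp fields of std_msgs::msg::Header, and `InputCache` and `InputInfo` are hypothetical helpers.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical mirror of the fields of std_msgs::msg::Header used here.
struct MockHeader {
  std::int32_t sec = 0;
  std::uint32_t nanosec = 0;
  std::string frame_id;
};

// Whatever needs to be remembered about an input frame.
struct InputInfo {
  int width = 0;
  int height = 0;
};

// Not thread-safe: guard it with a mutex if PreProcess and PostProcess
// run on different threads.
class InputCache {
 public:
  void Store(const MockHeader &header, const InputInfo &info) {
    cache_[Key(header)] = info;
  }

  // Looks up and removes the entry for this header; false if there is none.
  bool Take(const MockHeader &header, InputInfo &info) {
    auto it = cache_.find(Key(header));
    if (it == cache_.end()) return false;
    info = it->second;
    cache_.erase(it);
    return true;
  }

 private:
  // Timestamp in nanoseconds serves as the lookup key.
  static std::int64_t Key(const MockHeader &header) {
    return static_cast<std::int64_t>(header.sec) * 1000000000LL + header.nanosec;
  }
  std::map<std::int64_t, InputInfo> cache_;
};
```

Removing an entry when it is taken keeps the cache from growing; entries for frames whose inference failed would still need a separate cleanup policy.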

- Synchronous and Asynchronous Inference

dnn node supports two types of inference modes: synchronous and asynchronous. When calling the Run interface for inference, specify the is_sync_mode parameter to set the mode, with the default being the more efficient asynchronous mode.

Synchronous inference: When calling the Run interface for inference, the interface internally blocks until the inference is completed and the PostProcess callback interface finishes processing the model output.

Asynchronous inference: When the Run interface is called, the inference is processed asynchronously by an internal thread pool. Once the inference task has been handed to the thread pool, Run returns immediately without waiting for the inference to finish. When the inference result is returned (either because inference completed or because an error occurred), the dnn node releases the task internally using the ReleaseTask interface and returns the model output through the PostProcess interface.

Asynchronous inference mode can fully utilize the BPU and improve the inference output frame rate, but it cannot guarantee that outputs arrive in the same order as inputs. For scenarios that depend on output order, determine whether the algorithm outputs need to be sorted.

Unless there are specific requirements, it is recommended to use the default more efficient asynchronous mode for algorithm inference.
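If an application does need outputs in input order while using asynchronous mode, one common approach is a small reorder buffer. The sketch below is not part of the dnn node: `ReorderBuffer` is a hypothetical helper, and it assumes the user tags every input with a consecutive sequence number starting at 0 (for example through an extended output type) and that every tagged frame eventually produces a result.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

class ReorderBuffer {
 public:
  // Accepts a result tagged with its input sequence number and returns every
  // result that can now be released in input order (possibly none).
  std::vector<int> Push(std::uint64_t seq, int result) {
    assert(seq >= next_seq_);  // each sequence number is delivered at most once
    pending_[seq] = result;
    std::vector<int> ready;
    auto it = pending_.begin();
    while (it != pending_.end() && it->first == next_seq_) {
      ready.push_back(it->second);
      it = pending_.erase(it);
      ++next_seq_;
    }
    return ready;
  }

 private:
  std::uint64_t next_seq_ = 0;            // next sequence number to release
  std::map<std::uint64_t, int> pending_;  // results that arrived early
};
```

A dropped or failed frame would stall this buffer, so a production version would need a timeout or a way to skip missing sequence numbers, and a mutex if PostProcess can run on several threads.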

- Using the Built-in Model Output Parsing Methods in dnn node

dnn node comes with various model output parsing methods for detection, classification, and segmentation algorithms. After installing TROS on X3, the supported parsing methods are as follows:

    root@ubuntu:~# tree /opt/tros/include/dnn_node/util/output_parser
    /opt/tros/include/dnn_node/util/output_parser
    ├── classification
    │   └── ptq_classification_output_parser.h
    ├── detection
    │   ├── fasterrcnn_output_parser.h
    │   ├── fcos_output_parser.h
    │   ├── nms.h
    │   ├── ptq_efficientdet_output_parser.h
    │   ├── ptq_ssd_output_parser.h
    │   ├── ptq_yolo2_output_parser.h
    │   ├── ptq_yolo3_darknet_output_parser.h
    │   └── ptq_yolo5_output_parser.h
    ├── perception_common.h
    ├── segmentation
    │   └── ptq_unet_output_parser.h
    └── utils.h

    3 directories, 12 files

The /opt/tros/include/dnn_node/util/output_parser directory contains three subdirectories, classification, detection, and segmentation, which hold the output parsing methods for classification, detection, and segmentation algorithms respectively.

perception_common.h defines the parsed perception result data type.

The algorithm models and their corresponding output parsing methods are as follows:

| Algorithm Category | Algorithm | Output Parsing Method |
|---|---|---|
| Object Detection | FCOS | fcos_output_parser.h |
| Object Detection | EfficientNet_Det | ptq_efficientdet_output_parser.h |
| Object Detection | MobileNet_SSD | ptq_ssd_output_parser.h |
| Object Detection | YoloV2 | ptq_yolo2_output_parser.h |
| Object Detection | YoloV3 | ptq_yolo3_darknet_output_parser.h |
| Object Detection | YoloV5 | ptq_yolo5_output_parser.h |
| Human Detection | FasterRcnn | fasterrcnn_output_parser.h |
| Image Classification | mobilenetv2 | ptq_classification_output_parser.h |
| Semantic Segmentation | mobilenet_unet | ptq_unet_output_parser.h |

In the inference result callback PostProcess(const std::shared_ptr<hobot::dnn_node::DnnNodeOutput> &node_output), using the built-in parsing method in hobot_dnn to parse the output of the YoloV5 algorithm is shown in the following example:

    // 1 Create parsed output data, DnnParserResult is the algorithm output data type corresponding to the built-in parsing method in hobot_dnn
    std::shared_ptr<DnnParserResult> det_result = nullptr;

    // 2 Start the parsing
    if (hobot::dnn_node::parser_yolov5::Parse(node_output, det_result) < 0) {
      RCLCPP_ERROR(rclcpp::get_logger("dnn_node_sample"),
                   "Parse output_tensors fail!");
      return -1;
    }

    // 3 Use the parsed algorithm result det_result

Changelog for package dnn_node

tros_2.4.2 (2024-12-09)

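- Fixed a crash caused by out-of-bounds boxes in the yolov8-seg model post-processing.

tros_2.4.1 (2024-11-18)

- Fixed incorrect inference time calculation in multi-threaded inference.

- Upgraded the OpenCV dependency from 3.4.5 to 4.5.

- Added post-processing for the stdc segmentation model.

tros_2.4.0rc1 (2024-09-04)

- Added an error code that is returned when inference requests exceed the thread pool capacity.

tros_2.4.0 (2024-07-15)

- Adapted to the X5 platform, with dnn version 1.23.5.

- Updated post-processing for the YOLOv8 and YOLOv10 models.

- Fixed a bug in YOLOv5 where only one box was output after multi-threaded processing of each layer.

- Updated post-processing for the YOLOv8Seg model.

- Fixed a bug in SSD where missing dequantization produced incorrect results.

tros_2.3.0rc2 (2024-05-20)

- Fixed abnormal memory allocation for model output tensors in dnn_node.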

tros_2.3.0 (2024-03-27)

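- Added a bilingual (Chinese and English) README.

- Added the Model class to manage the model lifecycle.

- Added the Task class to manage the inference task lifecycle.

- Simplified the model post-processing framework so that parsing uniformly works directly on the model inference output tensors.

- Added several ImageProc methods for reading data and converting it to DNNInput and DNNTensor.

- Used sync to speed up tensor parsing in yolo5 post-processing.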

tros_2.2.2 (2023-12-22)

tros_2.1.1 (2023-07-14)

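- Standardized the Rdkultra product name.

tros_2.1.0 (2023-07-07)

- Adapted to the RDK Ultra platform, with easydnn version 1.6.1, dnn version 1.19.3 (aligned with OE release v1.1.57_release), and hlog version 1.6.1.

tros_2.0.1 (2023-06-07)

- Updated README.md to state the dependent easydnn version as 1.6.1 and the dnn version as 1.18.4.

tros_2.0.0rc1 (2023-05-23)

- Fixed a possible crash in MobileNet_SSD.

tros_2.0.0 (2023-05-11)

- Updated package.xml to support packaging applications independently.

tros_1.1.7 (2023-4-12)

- Fixed inference failure for DDR models on the X86 platform.

tros_1.1.6b (2023-3-03)

- Fixed the incorrect libhlog.so.1 name when installing the dnn_node dynamic library.

tros_1.1.6a (2023-2-16)

- Adapted dnn node to x86 and added an x86 compilation option.

tros_1.1.4 (2022-12-23)

- Fixed missing return values from LoadConfig in the post-processing of some built-in algorithms.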

tros_1.1.3 (2022-11-09)

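- Fixed garbled image padding caused by uninitialized memory allocated in the GetNV12PyramidFromNV12Img interface.

tros_1.1.2rc2 (2022-10-26)

- Fixed incorrect parameter usage in the built-in MobileNet_SSD object detection output parsing method.

tros_1.1.2rc1 (2022-10-17)

- Refactored the built-in algorithm output parsing methods.

- Added adaptive support for multi-core model inference.

- Added support for user-configured parameters of the built-in yolov2, yolov3, yolov5, and fcos parsing methods.

tros_1.1.2 (2022-09-26)

- Added message header and model output tensor members to the node output data type DnnNodeOutput, so users can parse tensors directly.

- Changed the default inference mode from synchronous to the more efficient asynchronous mode.

- When the model file contains only one model, the model_name option in the DnnNodePara configuration may be omitted.

- Inference tasks can be assigned to a specified BPU core; load-balancing mode is used by default.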

hhp_1.0.4 (2022-07-29)

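- Upgraded the dnn inference library to version 1.9.6c, fixing occasional abnormal inference output and errors when accessing the encryption chip.

- Adapted the easydnn prediction library to dnn and upgraded it to version 0.4.11; the built-in model post-processing was migrated from easydnn to dnn_node.

- Updated FCOS model post-processing to align with the OpenExplorer model version.

v1.0.1 (2022-06-23)

- Optimized the asynchronous inference flow to increase the inference output frame rate.

- Added performance statistics to the inference results for analyzing model inference performance, including statistics for inference and for parsing model output (start and end timestamps and elapsed time), the inference input frame rate, and the output frame rate of successful inferences.

- Optimized the preset mobilenet_unet model post-processing to reduce post-processing time and algorithm output data bandwidth.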


Package Dependencies

| Name |
|---|
| ament_cmake |
| ament_lint_auto |
| ament_lint_common |
| rclcpp |
| std_msgs |
| ament_cmake_gtest |
| hobot-dnn |