isaac_ros_dnn_inference repository

Repository Summary

| Field | Value |
|---|---|
| Description | NVIDIA-accelerated DNN model inference ROS 2 packages using NVIDIA Triton/TensorRT for both Jetson and x86_64 with CUDA-capable GPU |
| Checkout URI | https://github.com/nvidia-isaac-ros/isaac_ros_dnn_inference.git |
| VCS Type | git |
| VCS Version | main |
| Last Updated | 2025-02-28 |
| Dev Status | UNMAINTAINED |
| CI status | No Continuous Integration |
| Released | UNRELEASED |
| Tags | ai deep-learning gpu dnn ros nvidia triton deeplearning tao jetson ros2 tensorrt triton-inference-server tensorrt-inference ros2-humble |
| Contributing | Help Wanted (0), Good First Issues (0), Pull Requests to Review (0) |

Packages

| Name | Version |
|---|---|
| isaac_ros_dnn_image_encoder | 3.2.5 |
| gxf_isaac_tensor_rt | 3.2.5 |
| gxf_isaac_triton | 3.2.5 |
| isaac_ros_tensor_proc | 3.2.5 |
| isaac_ros_tensor_rt | 3.2.5 |
| isaac_ros_triton | 3.2.5 |

README

Isaac ROS DNN Inference

NVIDIA-accelerated DNN model inference ROS 2 packages using NVIDIA Triton/TensorRT for both Jetson and x86_64 with CUDA-capable GPU.

Webinar Available

Learn how to use this package by watching our on-demand webinar: Accelerate YOLOv5 and Custom AI Models in ROS with NVIDIA Isaac

Overview

Isaac ROS DNN Inference contains ROS 2 packages for performing DNN inference, providing AI-based perception for robotics applications. DNN inference uses a pre-trained DNN model to ingest an input Tensor and output a prediction to an output Tensor.

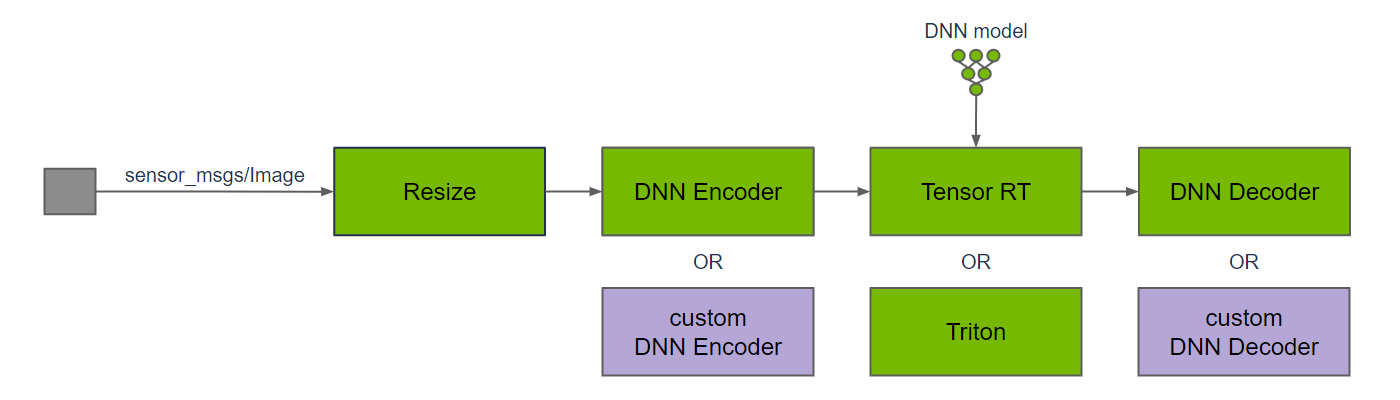

Above is a typical graph of nodes for DNN inference on image data. The input image is resized to match the input resolution of the DNN; the image resolution may be reduced to lower the cost of DNN inference, which typically scales directly with the number of pixels in the image. DNN inference requires input Tensors, so a DNN encoder node converts the input image to Tensors, including any data pre-processing that the DNN model requires. Once DNN inference is performed, the DNN decoder node converts the output Tensors to results that can be used by the application.
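The resize and encode steps described above can be sketched in plain NumPy. This is only an illustration of the data transformation, not the implementation used by the DNN encoder node: the function name `encode_image`, the nearest-neighbor resize, the mean and standard deviation values, and the NCHW output layout are all assumptions chosen for the example.

```python
import numpy as np


def encode_image(image, out_h, out_w, mean=(0.5, 0.5, 0.5), std=(0.5, 0.5, 0.5)):
    """Convert an HxWx3 uint8 image into a 1x3xout_hxout_w float32 tensor.

    Hypothetical helper for illustration; the normalization constants and the
    NCHW layout are assumptions and must match what the model was trained with.
    """
    in_h, in_w, _ = image.shape
    # Nearest-neighbor resize: pick one source row/column per output pixel.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    resized = image[rows][:, cols]
    # Scale to [0, 1], then normalize each channel.
    scaled = resized.astype(np.float32) / 255.0
    mean_arr = np.asarray(mean, dtype=np.float32)
    std_arr = np.asarray(std, dtype=np.float32)
    normalized = (scaled - mean_arr) / std_arr
    # Reorder HWC -> CHW and add a leading batch dimension (NCHW).
    return np.ascontiguousarray(normalized.transpose(2, 0, 1))[np.newaxis, ...]


# Synthetic white VGA frame, reduced to half resolution before inference.
frame = np.full((480, 640, 3), 255, dtype=np.uint8)
tensor = encode_image(frame, out_h=240, out_w=320)
```

Halving each dimension, as in the usage lines, cuts the pixel count to a quarter, which is the kind of reduction the paragraph above refers to.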

DNN inference is provided by two separate ROS nodes: the TensorRT node and the Triton node. The TensorRT node uses TensorRT to provide high-performance deep learning inference. TensorRT optimizes the DNN model for inference on the target hardware, including Jetson and discrete GPUs. It supports a specific set of operations that are commonly used by DNN models, so newer or bespoke DNN models may not be supported by TensorRT. For these models, use the Triton node.

The Triton node uses the Triton Inference Server, which provides a compatible frontend supporting a combination of different inference backends (e.g. ONNX Runtime, TensorRT Engine Plan, TensorFlow, PyTorch). In-house benchmark results measure little difference between using TensorRT directly or configuring Triton to use TensorRT as a backend.
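Triton selects a backend per model through a `config.pbtxt` file stored alongside the model in its model repository. The sketch below renders a minimal configuration as text. The helper name `render_triton_config`, the model name, the tensor names, and the shapes are hypothetical; the `platform` strings `tensorrt_plan` and `onnxruntime_onnx` and the `TYPE_FP32` data type come from Triton's model configuration format. Check the Isaac ROS and Triton documentation for the settings your model actually needs.

```python
def _tensor_entry(tensor):
    """Render one input/output entry of a Triton model configuration."""
    name = tensor["name"]
    data_type = tensor["data_type"]
    dims = ", ".join(str(d) for d in tensor["dims"])
    return (
        "  {\n"
        f'    name: "{name}"\n'
        f"    data_type: {data_type}\n"
        f"    dims: [ {dims} ]\n"
        "  }"
    )


def render_triton_config(name, platform, inputs, outputs, max_batch_size=0):
    """Return the text of a minimal config.pbtxt (hypothetical helper)."""
    input_entries = ",\n".join(_tensor_entry(t) for t in inputs)
    output_entries = ",\n".join(_tensor_entry(t) for t in outputs)
    return (
        f'name: "{name}"\n'
        f'platform: "{platform}"\n'
        f"max_batch_size: {max_batch_size}\n"
        f"input [\n{input_entries}\n]\n"
        f"output [\n{output_entries}\n]\n"
    )


# Example values below are assumptions, not taken from a real model.
config = render_triton_config(
    name="example_model",
    platform="tensorrt_plan",  # use "onnxruntime_onnx" for an ONNX model
    inputs=[{"name": "input_tensor", "data_type": "TYPE_FP32",
             "dims": [1, 3, 544, 960]}],
    outputs=[{"name": "output_tensor", "data_type": "TYPE_FP32",
              "dims": [1, 2, 544, 960]}],
)
print(config)
```

Switching backends then amounts to changing the `platform` value and supplying the matching model file, which is what lets the same Triton node serve models that TensorRT alone cannot run.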

Some DNN models may require custom DNN encoders to convert the input data to the Tensor format needed for the model, and custom DNN decoders to convert the output Tensors into results that can be used in the application. Use the provided DNN encoder and DNN decoder nodes for image bounding box detection and image segmentation, or supply your own custom nodes.
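As a rough illustration of what a custom decoder does, the sketch below turns a segmentation output Tensor into a per-pixel class mask. It assumes the model emits raw per-class scores in a `(1, C, H, W)` layout; the function name `decode_segmentation` is hypothetical, and a real model may use a different layout or already apply the argmax internally.

```python
import numpy as np


def decode_segmentation(output_tensor):
    """Turn a 1xCxHxW score tensor into an HxW uint8 class mask.

    Hypothetical helper: assumes one score plane per class and a batch of one.
    """
    if output_tensor.ndim != 4 or output_tensor.shape[0] != 1:
        raise ValueError("expected a tensor of shape (1, C, H, W)")
    # For every pixel, keep the index of the highest-scoring class.
    return np.argmax(output_tensor[0], axis=0).astype(np.uint8)


# Tiny synthetic output: two classes on a 2x3 image.
scores = np.zeros((1, 2, 2, 3), dtype=np.float32)
scores[0, 1, 0, :] = 1.0  # class 1 scores highest along the top row
mask = decode_segmentation(scores)
```

The resulting mask can then be published as an image or used to colorize the input, depending on what the application needs.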

> [!NOTE]
> DNN inference can be performed on different types of input data, including audio, video, text, and various sensor data, such as LIDAR, camera, and RADAR. This package provides implementations of DNN encode and DNN decode functions for images, which are commonly used for perception in robotics. The DNNs operate on Tensors for their input, output, and internal transformations, so the input image needs to be converted to a Tensor for DNN inference.

Isaac ROS NITROS Acceleration

This package is powered by NVIDIA Isaac Transport for ROS (NITROS), which leverages type adaptation and negotiation to optimize message formats and dramatically accelerate communication between participating nodes.

Performance

| Sample Graph | Input Size | AGX Orin | Orin NX | Orin Nano Super 8GB | x86_64 w/ RTX 4090 |
|---|---|---|---|---|---|
| TensorRT Node DOPE | VGA | 30.8 fps, 37 ms @ 30Hz | 15.5 fps, 55 ms @ 30Hz | 20.8 fps, 51 ms @ 30Hz | 298 fps, 5.3 ms @ 30Hz |
| Triton Node DOPE | VGA | 31.2 fps, 340 ms @ 30Hz | 15.5 fps, 55 ms @ 30Hz | 22.2 fps, 490 ms @ 30Hz | 277 fps, 4.7 ms @ 30Hz |
| TensorRT Node PeopleSemSegNet | 544p | 489 fps, 4.6 ms @ 30Hz | 258 fps, 7.1 ms @ 30Hz | 269 fps, 6.2 ms @ 30Hz | 619 fps, 2.2 ms @ 30Hz |
| Triton Node PeopleSemSegNet | 544p | 216 fps, 5.5 ms @ 30Hz | 143 fps, 8.2 ms @ 30Hz | – | 585 fps, 2.5 ms @ 30Hz |
| DNN Image Encoder Node | VGA | 339 fps, 13 ms @ 30Hz | 375 fps, 12 ms @ 30Hz | – | 480 fps, 6.0 ms @ 30Hz |

Documentation

Please visit the Isaac ROS Documentation to learn how to use this repository.

Packages

Latest

Update 2024-12-10: Update to be compatible with JetPack 6.1

CONTRIBUTING

Isaac ROS Contribution Rules

Any contribution that you make to this repository will be under the Apache 2 License, as dictated by that license:

5. Submission of Contributions. Unless You explicitly state otherwise, any Contribution intentionally submitted for inclusion in the Work by You to the Licensor shall be under the terms and conditions of this License, without any additional terms or conditions. Notwithstanding the above, nothing herein shall supersede or modify the terms of any separate license agreement you may have executed with Licensor regarding such Contributions.

Contributors must sign off each commit by adding a Signed-off-by: ... line to commit messages to certify that they have the right to submit the code they are contributing to the project according to the Developer Certificate of Origin (DCO).
